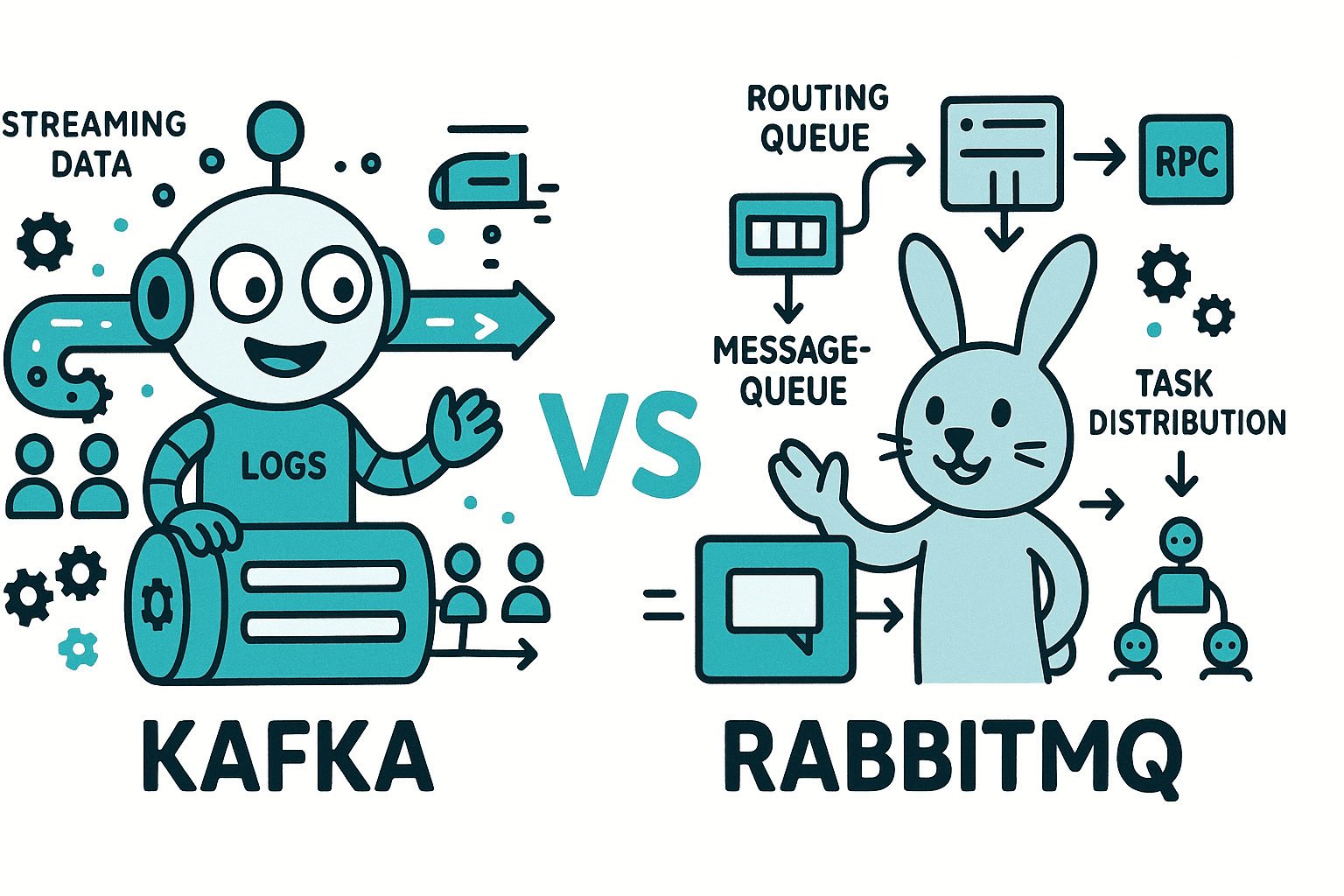

Choosing between Apache Kafka and RabbitMQ is one of the most critical decisions when designing a distributed system. While both are powerful messaging solutions, they serve fundamentally different purposes and excel in different scenarios. This comprehensive guide will help you understand the key differences, use cases, and when to use each technology.

What is Apache Kafka?

Apache Kafka is a distributed event streaming platform designed for high-throughput, fault-tolerant, and real-time data pipelines. Originally developed by LinkedIn in 2011 and later open-sourced, Kafka treats messages as an immutable log of events that can be replayed and consumed by multiple consumers.

Key Characteristics of Kafka

- Distributed commit log: Messages are stored as an append-only log

- High throughput: Capable of handling millions of messages per second

- Horizontal scalability: Easily scales by adding more brokers

- Message persistence: Messages are stored on disk and retained based on configurable policies

- Pull-based consumption: Consumers pull messages at their own pace

- Event streaming: Designed for continuous data flows and stream processing

What is RabbitMQ?

RabbitMQ is a traditional message broker that implements the Advanced Message Queuing Protocol (AMQP). Created in 2007, RabbitMQ focuses on reliable message delivery between producers and consumers with sophisticated routing capabilities.

Key Characteristics of RabbitMQ

- Traditional message broker: Implements queue-based messaging patterns

- Smart broker, dumb consumer: Broker handles routing logic and delivery guarantees

- Push-based delivery: Messages are pushed to consumers

- Complex routing: Supports exchanges, bindings, and routing keys

- Message acknowledgment: Strong delivery guarantees with ack/nack mechanisms

- Multiple protocols: Supports AMQP, STOMP, MQTT, and more

Core Architecture Differences

Kafka Architecture

Kafka uses a distributed log architecture:

Producer → Topic (Partitions) → Consumer Groups

↓

Disk Storage (Retained)

Components:

- Topics: Categories for messages

- Partitions: Ordered, immutable sequences of messages within a topic

- Brokers: Kafka servers that store and serve data

- ZooKeeper/KRaft: Cluster coordination (KRaft replacing ZooKeeper in newer versions)

- Consumer Groups: Groups of consumers that parallelize message processing

Example Kafka Configuration:

# Kafka Topic with 3 partitions

Topic: user-events

Partitions: 3

Replication Factor: 3

Retention Period: 7 days

RabbitMQ Architecture

RabbitMQ uses a queue-based architecture:

Producer → Exchange → Queue → Consumer

↓

(Routing Rules)

Components:

- Exchanges: Route messages to queues (direct, fanout, topic, headers)

- Queues: Store messages until consumed

- Bindings: Rules connecting exchanges to queues

- Consumers: Receive and process messages

Example RabbitMQ Configuration:

# RabbitMQ Exchange and Queue Setup

exchange = 'user_events'

exchange_type = 'topic'

queue = 'user_registration_queue'

routing_key = 'user.registered.*'

Performance Comparison

Throughput

Kafka:

- Writes: 1M+ messages/second per broker

- Reads: 2M+ messages/second per broker

- Optimized for high-volume, sequential I/O

RabbitMQ:

- Writes: 10K-100K messages/second per node

- Reads: 10K-100K messages/second per node

- Better for lower volume with complex routing

Latency

Kafka:

- End-to-end latency: 5-50ms

- Higher latency due to batching and disk writes

- Optimized for throughput over latency

RabbitMQ:

- End-to-end latency: 1-10ms

- Lower latency for individual messages

- Optimized for fast message delivery

Storage

Kafka:

- Messages persisted to disk by default

- Retention based on time or size policies

- Can store terabytes of data

- Consumers can replay messages

RabbitMQ:

- Messages stored in memory (by default)

- Deleted after consumption

- Optional persistence with performance trade-off

- No native message replay

Message Consumption Models

Kafka: Pull-Based Model

Consumers pull messages from Kafka at their own pace:

# Kafka Consumer Example (Python with kafka-python)

from kafka import KafkaConsumer

consumer = KafkaConsumer(

'user-events',

bootstrap_servers=['localhost:9092'],

group_id='user-service-group',

enable_auto_commit=False,

value_deserializer=lambda m: m.decode('utf-8')

)

for message in consumer:

process_user_event(message.value)

consumer.commit() # Manual commit

// Kafka Consumer Example (C# with Confluent.Kafka)

using Confluent.Kafka;

var config = new ConsumerConfig

{

BootstrapServers = "localhost:9092",

GroupId = "user-service-group",

EnableAutoCommit = false,

AutoOffsetReset = AutoOffsetReset.Earliest

};

using var consumer = new ConsumerBuilder<string, string>(config).Build();

consumer.Subscribe("user-events");

while (true)

{

var consumeResult = consumer.Consume(TimeSpan.FromMilliseconds(100));

if (consumeResult != null)

{

ProcessUserEvent(consumeResult.Message.Value);

consumer.Commit(consumeResult); // Manual commit

}

}

Advantages:

- Consumers control processing rate

- Natural backpressure handling

- Easy to replay messages

- Better for batch processing

RabbitMQ: Push-Based Model

RabbitMQ pushes messages to consumers:

# RabbitMQ Consumer Example (Python with pika)

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='user_events')

def callback(ch, method, properties, body):

print(f"Processing: {body}")

process_user_event(body)

ch.basic_ack(delivery_tag=method.delivery_tag)

channel.basic_qos(prefetch_count=1)

channel.basic_consume(queue='user_events', on_message_callback=callback)

channel.start_consuming()

// RabbitMQ Consumer Example (C# with RabbitMQ.Client)

using RabbitMQ.Client;

using RabbitMQ.Client.Events;

using System.Text;

var factory = new ConnectionFactory() { HostName = "localhost" };

using var connection = factory.CreateConnection();

using var channel = connection.CreateModel();

channel.QueueDeclare(queue: "user_events",

durable: false,

exclusive: false,

autoDelete: false,

arguments: null);

channel.BasicQos(prefetchSize: 0, prefetchCount: 1, global: false);

var consumer = new EventingBasicConsumer(channel);

consumer.Received += (model, ea) =>

{

var body = ea.Body.ToArray();

var message = Encoding.UTF8.GetString(body);

Console.WriteLine($"Processing: {message}");

ProcessUserEvent(message);

channel.BasicAck(deliveryTag: ea.DeliveryTag, multiple: false);

};

channel.BasicConsume(queue: "user_events", autoAck: false, consumer: consumer);

Advantages:

- Lower latency for individual messages

- Immediate delivery

- Built-in flow control

- Better for real-time processing

Use Cases and When to Choose Each

When to Use Kafka

1. Event Sourcing and Stream Processing

Use Case: Building an e-commerce platform where every user action needs to be captured and processed.

# Python: E-commerce Event Stream

# Topic: user-activity

# Events:

# - user.viewed_product

# - user.added_to_cart

# - user.completed_purchase

# - user.left_review

# Multiple consumers can process the same events

# Consumer Group 1: Analytics Service (calculates metrics)

# Consumer Group 2: Recommendation Engine (builds user profiles)

# Consumer Group 3: Audit Service (compliance logging)

from kafka import KafkaProducer, KafkaConsumer

import json

# Producer publishing user events

producer = KafkaProducer(

bootstrap_servers=['localhost:9092'],

value_serializer=lambda v: json.dumps(v).encode('utf-8')

)

# Publish events

producer.send('user-activity', {'event': 'user.viewed_product', 'userId': 123, 'productId': 456})

producer.send('user-activity', {'event': 'user.added_to_cart', 'userId': 123, 'productId': 456})

// C#: E-commerce Event Stream

using Confluent.Kafka;

using System.Text.Json;

var config = new ProducerConfig { BootstrapServers = "localhost:9092" };

using var producer = new ProducerBuilder<string, string>(config).Build();

// Publish events to user-activity topic

var viewedEvent = new { Event = "user.viewed_product", UserId = 123, ProductId = 456 };

producer.Produce("user-activity", new Message<string, string>

{

Key = "123",

Value = JsonSerializer.Serialize(viewedEvent)

});

var cartEvent = new { Event = "user.added_to_cart", UserId = 123, ProductId = 456 };

producer.Produce("user-activity", new Message<string, string>

{

Key = "123",

Value = JsonSerializer.Serialize(cartEvent)

});

Why Kafka?

- Events need to be replayed for analytics

- Multiple services need the same data

- High volume of events (thousands per second)

- Historical data analysis required

2. Log Aggregation

Use Case: Collecting logs from hundreds of microservices.

# Centralized Logging Pipeline

Services (100+) → Kafka Topic: application-logs →

├→ Elasticsearch (search/visualization)

├→ S3 (long-term storage)

└→ Alert Service (real-time monitoring)

Why Kafka?

- Handles massive log volumes

- Decouples log producers from consumers

- Reliable buffering during consumer outages

- Retention allows debugging historical issues

3. Real-Time Data Pipelines

Use Case: IoT platform processing sensor data.

# IoT Data Pipeline

Sensors (10,000+) → Kafka Topic: sensor-readings →

├→ Real-time Dashboard (last 5 minutes)

├→ Anomaly Detection (streaming ML)

├→ Data Lake (batch analytics)

└→ Alert System (threshold monitoring)

Why Kafka?

- High throughput for IoT data

- Multiple consumers with different processing speeds

- Data retention for batch processing

- Exactly-once semantics for critical data

4. Change Data Capture (CDC)

Use Case: Syncing database changes across systems.

-- Database changes captured via Kafka Connect

Source: PostgreSQL (users table) →

Kafka Topic: db.users.changes →

├→ Elasticsearch (search index)

├→ Redis (cache invalidation)

└→ Analytics Database (reporting)

Why Kafka?

- Reliable capture of all database changes

- Ordered delivery per partition

- Multiple downstream systems

- Replay capability for rebuilding indexes

When to Use RabbitMQ

1. Task Distribution and Worker Queues

Use Case: Image processing service with background workers.

# Image Processing Queue

Upload Service → RabbitMQ Queue: image-processing →

Worker Pool (5 workers):

- Resize image

- Generate thumbnails

- Apply filters

- Update database

Why RabbitMQ?

- Simple task distribution

- Automatic load balancing across workers

- Acknowledgments ensure no task is lost

- Priority queues for urgent tasks

2. Request/Reply Patterns

Use Case: Microservices making RPC-style calls.

# Python: Order Service requesting inventory check

import pika

import json

import uuid

# RabbitMQ RPC Client

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

# Declare reply queue

result = channel.queue_declare(queue='', exclusive=True)

callback_queue = result.method.queue

# Send RPC request

correlation_id = str(uuid.uuid4())

channel.basic_publish(

exchange='',

routing_key='inventory_rpc',

properties=pika.BasicProperties(

reply_to=callback_queue,

correlation_id=correlation_id

),

body=json.dumps({'productId': 123, 'quantity': 2})

)

// C#: Order Service requesting inventory check

using RabbitMQ.Client;

using System.Text;

using System.Text.Json;

var factory = new ConnectionFactory() { HostName = "localhost" };

using var connection = factory.CreateConnection();

using var channel = connection.CreateModel();

// Declare reply queue

var replyQueue = channel.QueueDeclare().QueueName;

var correlationId = Guid.NewGuid().ToString();

// Send RPC request

var message = new { ProductId = 123, Quantity = 2 };

var body = Encoding.UTF8.GetBytes(JsonSerializer.Serialize(message));

var properties = channel.CreateBasicProperties();

properties.CorrelationId = correlationId;

properties.ReplyTo = replyQueue;

channel.BasicPublish(

exchange: "",

routingKey: "inventory_rpc",

basicProperties: properties,

body: body

);

Why RabbitMQ?

- Built-in RPC pattern support

- Temporary reply queues

- Correlation IDs for matching requests/responses

- Timeout handling

3. Complex Message Routing

Use Case: Notification system with multiple channels.

# Notification Routing

Producer → Topic Exchange: notifications →

Bindings:

- email.* → email_queue

- sms.urgent.* → sms_priority_queue

- push.*.* → push_notification_queue

- *.urgent.* → ops_alert_queue

Why RabbitMQ?

- Flexible routing with topic exchanges

- Multiple routing patterns

- Easy to add new routing rules

- Priority queues for urgent notifications

4. Legacy System Integration

Use Case: Integrating modern microservices with legacy systems.

Modern API → RabbitMQ → Legacy System (SOAP/XML)

├→ Transform messages

├→ Retry failed deliveries

└→ Dead letter queue for failures

Why RabbitMQ?

- Protocol flexibility (AMQP, STOMP, MQTT)

- Message transformation plugins

- Built-in retry and dead letter queues

- Better for lower volume integrations

Real-World Architecture Examples

Example 1: E-Commerce Platform (Hybrid Approach)

# Using Both Kafka and RabbitMQ

Kafka:

- User activity events (analytics)

- Product inventory changes (CDC)

- Order history (event sourcing)

RabbitMQ:

- Order processing tasks

- Email notifications

- Payment processing (RPC)

- Image uploads (worker queues)

Why Both?

- Kafka: High-volume events, analytics, data history

- RabbitMQ: Task execution, immediate notifications, RPC

Example 2: Financial Trading Platform (Kafka)

# Python: High-frequency trading system

# Kafka Topics:

# - market-data-feed (1M+ msgs/sec)

# - trade-orders

# - execution-reports

# - risk-metrics

from kafka import KafkaProducer, KafkaConsumer

from kafka.admin import KafkaAdminClient, NewTopic

# Create topics for trading system

admin_client = KafkaAdminClient(bootstrap_servers=['localhost:9092'])

topics = [

NewTopic(name='market-data-feed', num_partitions=10, replication_factor=3),

NewTopic(name='trade-orders', num_partitions=5, replication_factor=3),

NewTopic(name='execution-reports', num_partitions=5, replication_factor=3),

NewTopic(name='risk-metrics', num_partitions=3, replication_factor=3)

]

# Stream Processing:

# - Real-time risk calculations

# - Market data aggregation

# - Audit trail (compliance)

# - Analytics (replay historical data)

// C#: High-frequency trading system

using Confluent.Kafka;

using Confluent.Kafka.Admin;

var adminConfig = new AdminClientConfig { BootstrapServers = "localhost:9092" };

using var adminClient = new AdminClientBuilder(adminConfig).Build();

// Create topics for trading system

var topics = new List<TopicSpecification>

{

new TopicSpecification { Name = "market-data-feed", NumPartitions = 10, ReplicationFactor = 3 },

new TopicSpecification { Name = "trade-orders", NumPartitions = 5, ReplicationFactor = 3 },

new TopicSpecification { Name = "execution-reports", NumPartitions = 5, ReplicationFactor = 3 },

new TopicSpecification { Name = "risk-metrics", NumPartitions = 3, ReplicationFactor = 3 }

};

// Stream Processing:

// - Real-time risk calculations

// - Market data aggregation

// - Audit trail (compliance)

// - Analytics (replay historical data)

Why Only Kafka?

- Extreme throughput requirements

- Strict ordering guarantees

- Regulatory compliance (message retention)

- Complex stream processing

Example 3: Microservices Communication (RabbitMQ)

# Microservices with < 10K msgs/sec

Services:

- User Service → user.events exchange

- Order Service → order.events exchange

- Notification Service → notification.queue

- Payment Service → payment.rpc.queue

Patterns:

- Pub/Sub for events

- RPC for synchronous calls

- Work queues for background jobs

Why Only RabbitMQ?

- Moderate message volume

- Complex routing requirements

- RPC patterns needed

- Simpler operational overhead

Operational Considerations

Kafka Operations

Pros:

- Highly scalable horizontally

- Excellent fault tolerance

- Strong community and ecosystem

- Great monitoring tools (Confluent Control Center, Kafka Manager)

Cons:

- More complex to set up and manage

- Requires ZooKeeper (or KRaft in newer versions)

- Higher resource requirements

- Steeper learning curve

Monitoring Metrics:

Key Kafka Metrics:

- Under-replicated partitions

- Consumer lag

- Broker CPU/disk usage

- Request latency

- Network throughput

RabbitMQ Operations

Pros:

- Easier to set up and configure

- Lower resource requirements

- Good management UI

- Extensive plugin ecosystem

Cons:

- Vertical scaling challenges

- Clustering complexity

- Memory management critical

- Queue mirroring overhead

Monitoring Metrics:

Key RabbitMQ Metrics:

- Queue depth

- Message rates (publish/deliver)

- Memory usage

- Connection count

- Unacknowledged messages

Performance Optimization Tips

Kafka Optimization

# Producer Configuration

acks=all # Reliability

compression.type=lz4 # Reduce network bandwidth

batch.size=16384 # Batch messages

linger.ms=10 # Wait for batching

buffer.memory=33554432 # Buffer size

# Consumer Configuration

fetch.min.bytes=1024 # Minimum fetch size

fetch.max.wait.ms=500 # Max wait time

max.poll.records=500 # Records per poll

# Broker Configuration

num.network.threads=8 # Network threads

num.io.threads=8 # I/O threads

log.segment.bytes=1073741824 # Segment size

log.retention.hours=168 # 7 days retention

RabbitMQ Optimization

# rabbitmq.conf

vm_memory_high_watermark.relative = 0.6

disk_free_limit.absolute = 50GB

channel_max = 2048

heartbeat = 60

# Python Producer Optimization

channel.confirm_delivery() # Publisher confirms

channel.basic_publish(

exchange='',

routing_key='queue',

body=message,

properties=pika.BasicProperties(

delivery_mode=2, # Persistent messages

),

mandatory=True # Return if unroutable

)

Cost Considerations

Kafka Costs

Infrastructure:

- Higher CPU and memory requirements

- Significant disk storage (retention)

- ZooKeeper cluster overhead

- Typical: 3-5 brokers minimum for production

Managed Services:

- Confluent Cloud: $0.10-$0.15/GB ingress

- AWS MSK: $0.05-$0.10/GB + instance costs

- Azure Event Hubs: $0.028/million operations

RabbitMQ Costs

Infrastructure:

- Lower resource requirements

- Primarily memory-based

- Typical: 2-3 nodes for HA

Managed Services:

- CloudAMQP: $19-$399/month (by plan)

- AWS MQ: $0.30/hour + storage

- Azure Service Bus: $0.05/million operations

Migration Considerations

Migrating from RabbitMQ to Kafka

When to Consider:

- Message volume exceeds 100K msgs/sec

- Need for message replay

- Building data pipelines

- Stream processing requirements

Migration Strategy:

Phase 1: Dual Write

- Write to both RabbitMQ and Kafka

- Validate data consistency

Phase 2: Gradual Consumer Migration

- Move non-critical consumers to Kafka

- Monitor and validate

Phase 3: Complete Migration

- Move all consumers to Kafka

- Deprecate RabbitMQ

Migrating from Kafka to RabbitMQ

When to Consider:

- Overcomplicated for current needs

- Need complex routing

- RPC patterns required

- Cost reduction (for low volume)

Note: This is rare; usually indicates initial over-engineering.

Common Pitfalls and Solutions

Kafka Pitfalls

Pitfall 1: Consumer Lag

# Problem: Consumers can't keep up

# Solution: Increase partitions and consumer instances

# Check consumer lag

kafka-consumer-groups.sh --bootstrap-server localhost:9092 \

--group my-group --describe

# Scale consumers to match partition count

Partitions: 12 → Consumer Instances: 12

Pitfall 2: Wrong Partition Key

# Python: Bad vs Good partition key usage

from kafka import KafkaProducer

producer = KafkaProducer(bootstrap_servers=['localhost:9092'])

# Bad: Uneven distribution

producer.send('topic', key=b'constant-key', value=value)

# Good: Even distribution

producer.send('topic', key=user_id.encode('utf-8'), value=value)

// C#: Bad vs Good partition key usage

using Confluent.Kafka;

var config = new ProducerConfig { BootstrapServers = "localhost:9092" };

using var producer = new ProducerBuilder<string, string>(config).Build();

// Bad: Uneven distribution

producer.Produce("topic", new Message<string, string>

{

Key = "constant-key",

Value = value

});

// Good: Even distribution

producer.Produce("topic", new Message<string, string>

{

Key = userId,

Value = value

});

RabbitMQ Pitfalls

Pitfall 1: Memory Issues

# Python: Problem: Queues grow unbounded

# Solution: Set queue length limits

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(

queue='my_queue',

arguments={

'x-max-length': 10000,

'x-overflow': 'reject-publish'

}

)

// C#: Problem: Queues grow unbounded

// Solution: Set queue length limits

using RabbitMQ.Client;

var factory = new ConnectionFactory() { HostName = "localhost" };

using var connection = factory.CreateConnection();

using var channel = connection.CreateModel();

var arguments = new Dictionary<string, object>

{

{ "x-max-length", 10000 },

{ "x-overflow", "reject-publish" }

};

channel.QueueDeclare(queue: "my_queue",

durable: false,

exclusive: false,

autoDelete: false,

arguments: arguments);

Pitfall 2: Unacknowledged Messages

# Python: Bad vs Good acknowledgment handling

import pika

# Bad: Forgot to acknowledge

def callback_bad(ch, method, properties, body):

process(body)

# Missing: ch.basic_ack(method.delivery_tag)

# Good: Always acknowledge

def callback_good(ch, method, properties, body):

try:

process(body)

ch.basic_ack(method.delivery_tag)

except Exception:

ch.basic_nack(method.delivery_tag, requeue=True)

// C#: Bad vs Good acknowledgment handling

using RabbitMQ.Client;

using RabbitMQ.Client.Events;

var factory = new ConnectionFactory() { HostName = "localhost" };

using var connection = factory.CreateConnection();

using var channel = connection.CreateModel();

// Bad: Forgot to acknowledge

var badConsumer = new EventingBasicConsumer(channel);

badConsumer.Received += (model, ea) =>

{

var body = ea.Body.ToArray();

Process(body);

// Missing: channel.BasicAck(ea.DeliveryTag, false);

};

// Good: Always acknowledge

var goodConsumer = new EventingBasicConsumer(channel);

goodConsumer.Received += (model, ea) =>

{

try

{

var body = ea.Body.ToArray();

Process(body);

channel.BasicAck(deliveryTag: ea.DeliveryTag, multiple: false);

}

catch (Exception)

{

channel.BasicNack(deliveryTag: ea.DeliveryTag, multiple: false, requeue: true);

}

};

Decision Matrix

Use this matrix to help choose between Kafka and RabbitMQ:

| Requirement | Kafka | RabbitMQ |

|---|---|---|

| Throughput > 100K msgs/sec | yes | no |

| Message Replay | yes | no |

| Event Sourcing | yes | no |

| Stream Processing | yes | no |

| Complex Routing | no | yes |

| RPC Patterns | no | yes |

| Low Latency (< 10ms) | no | yes |

| Message Priority | no | yes |

| Ease of Setup | no | yes |

| Operational Simplicity | no | yes |

| Long-term Storage | yes | no |

| Multiple Consumers (same data) | yes | Limited |

| Exactly-Once Semantics | yes | Limited |

| Protocol Flexibility | no | yes |

Conclusion

Kafka and RabbitMQ are both excellent messaging solutions, but they serve different purposes:

Choose Kafka when you need:

- High-throughput event streaming

- Message replay and historical data access

- Stream processing capabilities

- Event sourcing architecture

- Data pipeline integration

- Multiple consumers reading the same data

Choose RabbitMQ when you need:

- Task distribution and worker queues

- Complex message routing

- RPC-style communication

- Lower latency for individual messages

- Simpler operational requirements

- Protocol flexibility

Use Both when:

- You have diverse messaging needs

- High-volume events + task queues

- Event streaming + RPC patterns

- Large-scale systems with varied requirements

The key is understanding your specific requirements: throughput, latency, message patterns, operational capabilities, and team expertise. Many organizations successfully use both technologies in a complementary manner, leveraging the strengths of each for different use cases.

Further Reading

- Apache Kafka Documentation

- RabbitMQ Documentation

- Confluent Platform

- CloudAMQP RabbitMQ Guide

- Designing Data-Intensive Applications by Martin Kleppmann

Frequently Asked Questions

Can Kafka replace RabbitMQ entirely?

Not necessarily. While Kafka can handle many use cases, RabbitMQ excels at complex routing, RPC patterns, and task queues. The best choice depends on your specific requirements.

Is Kafka faster than RabbitMQ?

Kafka has higher throughput (millions of messages/sec vs. tens of thousands), but RabbitMQ typically has lower latency for individual message delivery. "Faster" depends on your metric.

Can I use Kafka for task queues?

Yes, but it's not ideal. Kafka doesn't have native task acknowledgment or requeuing. RabbitMQ is better suited for traditional task queue patterns.

How much does it cost to run Kafka vs RabbitMQ?

Kafka generally requires more infrastructure (CPU, disk, memory) due to its distributed nature and storage requirements. RabbitMQ can run on smaller instances but may need more nodes for high availability.

Which one is easier to learn?

RabbitMQ has a gentler learning curve, especially for developers familiar with traditional message queues. Kafka requires understanding distributed systems concepts like partitions, consumer groups, and offset management.

🤝 Need a Custom RSVP System or Dashboard?

I help businesses build tools that actually work , even on tight deadlines.

Whether you're planning an event, need internal tools, or want a custom dashboard for your team , I can help.

Reach out

📧 Email: safi.abdulkader@gmail.com | 💻 LinkedIn: @abdulkader-safi | 📱 Instagram: @abdulkader.safi | 🏢 DSRPT

Drop me a line, I’m always happy to collaborate! 🚀