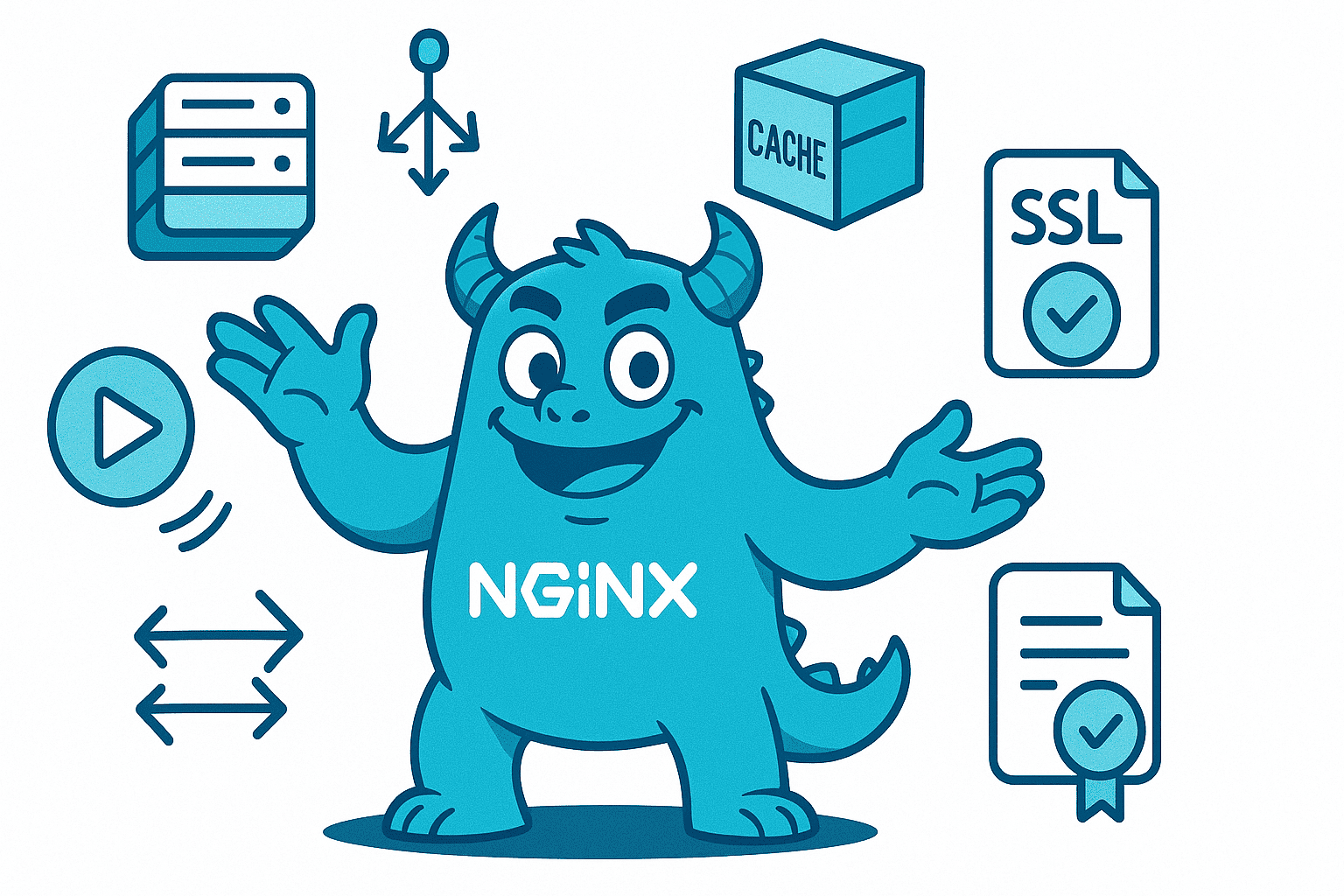

Introduction to Nginx

Nginx is one of the most powerful and versatile web servers powering the modern internet. While many developers know it as a basic web server, Nginx capabilities extend far beyond simple HTML delivery. This comprehensive guide explores everything Nginx can do, from reverse proxying to load balancing, caching, compression, and much more.

According to recent surveys from April 2025, Nginx powers over 33 percent of all web servers on the internet. To put this in perspective, Cloudflare ranks third on this list, while Node.js comes in fifth and serves only a fraction of what Nginx handles. Docker research revealed that Nginx is by far the most commonly deployed technology in Docker containers. Since 2012, it has been a core member of OpenBSD.

The performance numbers are staggering. Nginx can serve over 10,000 simultaneous connections with an extremely low memory footprint. This article will take you from simple configurations to advanced setups, revealing capabilities you may never have known existed.

The Origins and Evolution of Nginx

Nginx was born in 2002, created by Igor Sysoev to solve a critical problem known as the C10K challenge. This challenge referred to the difficulty of handling 10,000 concurrent requests per second, which was a major technical hurdle in 1999. Nginx was specifically designed to overcome this limitation.

The project quickly outgrew its original purpose. By 2011, Igor and his partner Maxim founded Nginx Inc., raising capital to provide commercial support for the open source project. In 2019, F5 Networks acquired Nginx for 670 million dollars, recognizing its strategic importance in modern web infrastructure.

A year after the acquisition, both founders left F5 amid some controversy. Maxim created a fork called Free Nginx, criticizing F5's management of the project. In 2022, other members of the core team created their own fork, which is actively maintained and serves as a drop-in replacement for the original.

Getting Started with Nginx Installation and Configuration

Installing Nginx is straightforward on most operating systems. Once installed, the Nginx configuration file typically lives under the /etc directory or nested under a path such as Homebrew on macOS.

The main configuration file, nginx.conf, contains different modules controlled by directives. For a simple web server, you need at minimum two directives:

The event directive handles connection processing, even if left empty with default settings. The http directive is where most web server behavior is configured. Starting with an empty event directive is enough to launch Nginx with all default settings.

Basic Server Configuration

Here is a simple example configuration. First, you set a different port from the default 80, which is always a good practice for local development. Then you define the server name and add a location path. Using /temp as an example, you can add a common try_files instruction that returns a 404 response if the requested URI or URI/index does not exist.

This fallback system lets you precisely configure behavior. If nothing exists at /temp, you get the expected 404 page from the server. Add an index file and refreshing the browser serves that file. This is the foundation of Nginx configuration.

Reverse Proxy: The Killer Feature

One of the most powerful features of Nginx is its ability to act as a reverse proxy. This functionality intercepts client requests and forwards them to backend servers, then returns responses as if Nginx handled them directly.

Imagine you have an Nginx server running and routing traffic. You want to serve some requests directly but proxy others to your backend API or another service. This is where proxy_pass comes in, making Nginx one of the best reverse proxy solutions available.

Configuring Reverse Proxy

You can proxy all requests going to a specific path like /secret and forward them to another server listening on a different port. For example, the first server block listens on port 80 serving a welcome screen, while /secret is a path that leads to another backend listening on port 8080.

When you run Nginx and call port 80, you get the welcome page. Navigate to the /secret path and you are served content from the backend server. While this is a simplified example, the power of this function becomes clear when you realize you can call external scripts, processes, or services and configure as many paths as needed, controlling how they are served and manipulating responses.

To prove this works, you can use netcat to listen on the proxied port. When you call /secret, you see the incoming request with all HTTP headers. This demonstrates the complete proxy chain.

Monitoring with Logs

When running production services, monitoring incoming traffic and debugging issues is critical. Nginx provides error_log and access_log directives for this purpose. Make sure these logs write to a path you know and can access.

Pro tip: when replacing text that includes paths, use tildes as separators to simplify your work. Once configured, you can tail both error and access logs to monitor activity in real time. Each access entry shows the IP address, timestamp, HTTP status code, and user agent. Error logs register issues like connection refused errors.

Caching: Turn Nginx into a CDN

Beyond proxying, Nginx offers powerful caching capabilities on multiple levels. Instead of hitting backend servers repeatedly, you can serve cached content from disk. This works great for static content, but Nginx can also cache dynamic responses.

By caching sub-millisecond responses, you can handle thousands of requests while reducing client latency and protecting backend services. In this way, Nginx functions as a small content delivery network.

Cache Configuration

The proxy_cache directive enables caching on a specific path and uses a name like my_cache to define the scope, which must be defined in the http block. The proxy_cache_valid line sets how long to cache upstream responses. For example, you can cache responses with HTTP status 200 or 302 for 10 minutes instead of using default lifetime settings.

Additional options include adding cache status headers to pass information to clients. You can configure Nginx to send requests to any endpoint. Using a service like httpbin to get dummy responses, you can test the caching mechanism.

When you make the first request using curl with the -I flag to get headers only, the cache status header shows MISS because this is the first request. Make the same request again and the status shows HIT. Subsequent requests continue showing HIT, meaning all responses come from cache. According to the configuration, cached content stays for 10 minutes before refreshing.

Compression: Reduce Latency and Cost

Large HTTP responses hurt everything: latency, network performance, and infrastructure costs. Compression is the natural solution, and Nginx excels at this.

GZIP Compression Setup

Enable GZIP compression and apply it to all backends or select specific ones. Choose a compression level between 1 and 9, with 6 being the sweet spot balancing compression ratio and CPU usage. Decide which content types to compress and set the minimum response size threshold for compression.

When calling a large JSON endpoint with the gzip header, the response returns with gzip under the content-encoding header. The same request without this header returns uncompressed content. The size difference is dramatic. A compressed version might be 400 bytes while the uncompressed version is more than 30 times larger.

This demonstrates how Nginx can proxy, serve, cache, and compress content all within a single configuration.

Load Balancing: Distributing Traffic Efficiently

Nginx is renowned in DevOps and Kubernetes communities as one of the best load balancers available. The Nginx documentation shows load balancing support for every communication protocol you can imagine.

Upstream Configuration

Starting with a simple HTTP upstream configuration, you can define multiple backend servers. Nginx interprets everything inside the upstream block as a backend server and uses round-robin distribution by default to spread incoming requests across all servers.

While round-robin is the default method, you can choose several other algorithms:

Least connections sends traffic to the server with the fewest active connections. IP hash creates sticky sessions by consistently routing the same client IP to the same backend server. Random selection picks a backend server randomly for each request.

Testing Load Balancing Locally

You can test this locally with two backends on ports 8181 and 8182. Incoming traffic on port 80 gets distributed between them. Using netcat to listen on both ports, you can observe how requests alternate between the two backends.

Each backend server can include health check configurations with maximum attempts and timeout settings. You can also assign weight values to influence routing decisions, sending more traffic to more powerful servers.

Performance Testing: The C10K Challenge

Does Nginx live up to its reputation? A load test using k6, a modern load testing tool, can verify the claims. A simple k6 script targeting the original C10K problem aims for 10,000 requests per second. Running this test for 60 seconds against a locally served Nginx instance on port 8080 provides real performance data.

With a lean configuration containing just an index file, k6 loads the system. The results are impressive. There are typically zero errors, though some test runs might show a few failures, never exceeding 0.1 percent at this request rate.

Final results show over half a million requests with an average of 10,000 requests per second. Nginx truly is a high-performance beast.

API Gateway Functionality

Nginx capabilities extend into API gateway territory. Beyond manipulating requests, Nginx can implement request limiting and throttling. Define a zone with the number of requests allowed per unit of time, set the rate, and you have basic rate limiting configured.

Every load balancer with API gateway functionality needs SSL termination, and Nginx handles this excellently. Configure Nginx to listen on port 443 for HTTPS requests, specify where certificates are stored, define supported protocols and cipher suites, and let Nginx handle the rest.

SSL Termination Example

Creating a local certificate and building a small Python backend demonstrates SSL termination. The backend reads unencrypted requests after they pass through the load balancer on port 443. This serves as a sanity check.

With paths configured and Nginx started, the backend serves requests. Nginx validates that configuration file syntax and functionality are correct before starting. Accessing https://localhost triggers a browser warning because the self-signed certificate is not trusted by default. However, the connection works and the backend receives the unencrypted request. SSL termination works successfully.

Media Streaming Capabilities

Nginx includes a module for streaming media content. You can configure movie buffer sizes, limit streaming rates, and stream from specific points in media files because media is always indexed. This makes Nginx suitable for video streaming platforms and media delivery services.

Container Deployment: The Modern Standard

The most common way to deploy Nginx in production today is through containers. Docker research from 2018 found that Nginx is the most deployed technology by a large margin through Docker Hub.

Nginx has countless image variants on the official repository, plus many other excellent repositories for Nginx forks and variations from providers like Bitnami, Ubuntu, Rancher, VMware, Intel, and CircleCI.

Choosing the Right Image

You can run Nginx using the latest tag, or go smaller with nginx:alpine, or push even further with nginx:alpine-slim. These images start at 172 megabytes for latest, drop to 53 megabytes for Alpine, and shrink to an impressive 12 megabytes for Alpine Slim.

The latest image includes all common dependencies like OpenSSL and zlib, making it great for development and staging environments. Alpine contains only essential libraries in minimal versions, making it ideal for production deployments. The slim version removes everything non-essential, including man pages.

While the slim version is the safest option from a security perspective with the smallest attack surface, it can make debugging difficult if you are not used to stripped containers with no shell. You may need to add libraries you know are required.

Nginx UI: Visual Management

Nginx excels at performance but traditionally offers no visual interface. Nginx UI is an open source web interface for managing Nginx with over 10,000 GitHub stars.

The dashboard provides system metrics including CPU load, network traffic, disk I/O, served requests, per-connection metrics, running processes, and more. You can visually browse configured sites and backends, edit configuration files through the web interface, and view access logs conveniently from your browser.

While Grafana is popular for monitoring, Nginx UI offers specific features tailored to Nginx management that make it particularly useful.

Learning Resources: Nginx Playground

For developers experimenting with Nginx configurations or learning the system, the Nginx Playground is an excellent online utility created by Julia Evans, a talented engineer known for educational tech comics.

The playground lets you add your configuration, run it virtually, and read the output to understand how Nginx interprets your directives. This is invaluable for learning and testing configurations before deploying to production.

Conclusion: Master the Infrastructure Foundation

Nginx is one of the most important building blocks of modern web infrastructure. Its versatility extends far beyond basic web serving to include reverse proxying, caching, compression, load balancing, SSL termination, API gateway functionality, and media streaming.

Understanding Nginx deeply allows you to build robust, high-performance systems that can scale to handle millions of requests. Whether you are deploying a simple website or building complex microservices architecture, Nginx provides the tools you need.

The performance characteristics are proven through decades of production use across the largest websites on the internet. With proper configuration, Nginx can handle extreme loads while maintaining low latency and minimal resource consumption.

Start with simple configurations and gradually explore advanced features as your needs grow. The combination of power, flexibility, and reliability makes Nginx an essential tool for any developer or operations engineer working with web infrastructure.

🤝 Need a Custom RSVP System or Dashboard?

I help businesses build tools that actually work , even on tight deadlines.

Whether you're planning an event, need internal tools, or want a custom dashboard for your team , I can help.

Reach out

📧 Email: safi.abdulkader@gmail.com | 💻 LinkedIn: @abdulkader-safi | 📱 Instagram: @abdulkader.safi | 🏢 DSRPT

Drop me a line, I’m always happy to collaborate! 🚀