Introduction: Solving the Image Organization Problem

Have you ever scrolled through a folder full of cryptically named images like IMG_0001.jpg, DSC_1234.jpg, or Screenshot_20231015.png? I certainly have, and it's frustrating trying to find that one specific photo when all you have to go by is a generic filename.

That's why I built an AI-powered image renaming application that analyzes your images and automatically generates descriptive, human-readable filenames using computer vision. Instead of IMG_0001.jpg, you get sunset_beach.jpg. Instead of DSC_1234.jpg, you get red_sports_car.jpg. The AI actually "sees" what's in your images and creates names that make sense.

In this comprehensive tutorial, I'll walk you through building a Python desktop application using tkinter, ttkbootstrap, and Ollama's local AI models. You'll learn:

- How to build modern Python GUI applications with tkinter and ttkbootstrap

- Choosing the right AI vision model (LLaVA, Mistral, Llama) for image recognition

- Implementing computer vision with local AI models using Ollama

- Threading patterns for responsive desktop applications

- Prompt engineering for vision AI models

- Performance optimization for AI-powered applications

The Tech Stack for AI Image Recognition

Before diving into the details, let me introduce the core technologies I used to build this Python desktop application:

- Python 3.8+: The foundation of the application

- tkinter: Python's standard GUI library for desktop applications

- ttkbootstrap: A modern theme package that makes tkinter look sleek and professional

- Ollama: A local AI runtime that allows you to run vision models on your own machine

- Pillow (PIL): For image processing and manipulation

Why Build a Python Desktop Application for Image Processing?

You might be wondering: "Why not a web app?" That's a fair question. Here's why I chose the desktop application route for this AI image tool:

- Privacy: All processing happens locally on your machine. Your images never leave your computer.

- Speed: No network latency or API rate limits. Everything runs as fast as your hardware allows.

- Cost: No cloud API fees. Once you have the models downloaded, everything is free.

- Offline Capability: Works without an internet connection after initial setup.

The Critical Lesson: Choosing the Right AI Vision Model

Here's where things got interesting. When I first started this AI image recognition project, I naively assumed that any AI model from Ollama would work for image analysis. I began with Mistral, a popular language model.

The Mistral Problem: Understanding Vision vs Text-Only Models

I wrote all my code, connected to Ollama, and ran my first test. The result? Filenames like:

im_sorry_but_i_cant_process_images.jpg

i_dont_have_vision_capabilities.jpg

as_an_ai_language_model_i_cannot.jpg

Frustrating, right? I spent hours debugging before I realized the fundamental issue: Mistral is a text-only language model. It has no vision capabilities whatsoever. It's like asking someone to describe a painting over the phone, they simply don't have the ability to see it.

This was my first major lesson: Not all AI models are created equal. You need a vision model (also called a multimodal model) for image processing tasks.

Discovering LLaVA and Multimodal Vision Models

After research and some trial and error, I discovered LLaVA (Large Language and Vision Assistant) - a multimodal AI model that revolutionized my project. LLaVA is specifically designed to process both images and text, making it perfect for computer vision tasks in Python applications.

Here are the best vision AI models I tested and recommend for image recognition:

| Model | VRAM Required | Speed | Quality | Best For |

|---|---|---|---|---|

| llava:7b | 4-6 GB | Fast | Good | General everyday use |

| llama3.2-vision | 8 GB | Medium | Excellent | Recommended balance |

| llava:13b | 10 GB | Medium | Very Good | High-quality results |

| llava:34b | 20 GB | Slow | Excellent | Maximum accuracy |

After testing multiple models, I settled on LLaVA as the default for its excellent balance of speed and accuracy.

Key Takeaways for AI Model Selection in Computer Vision

- Check Model Capabilities: Always verify that a model supports the modality you need (text, vision, audio, etc.). Not all AI models can process images.

- Consider Hardware Requirements: Vision AI models are memory-intensive. Make sure your GPU has enough VRAM for the model size.

- Balance Speed vs Quality: Larger models provide better image recognition accuracy but slower inference. Choose based on your use case.

- Test Before Committing: Run benchmarks with your specific use case before settling on a vision model for production.

Building the Application: Python Desktop App Architecture

The Python desktop application follows a clean, modular architecture following best practices for GUI development:

ImageRenamingAI/

├── main.py # Application entry point

├── models/

│ ├── ai_service.py # Ollama AI integration

│ └── config.py # Configuration constants

├── ui/

│ ├── main_window.py # Main application window

│ └── image_viewer.py # Image display component

└── utils/

└── file_handler.py # File operations

The AI Service Layer: Implementing Computer Vision with Ollama

The heart of this AI-powered application is the OllamaService class that handles image recognition and processing. Here's how it works:

class OllamaService:

def generate_title(self, image_path):

# 1. Encode image to base64

image_base64 = self._encode_image(image_path)

# 2. Create a detailed prompt

prompt = (

f"Analyze this image and provide ONLY a very short descriptive title "

f"(maximum {self.max_title_length} characters). "

f"Be concise, use lowercase with underscores instead of spaces. "

f"Examples: 'sunset_beach', 'red_car_highway', 'cat_sleeping'. "

)

# 3. Send to Ollama vision model

response = chat(

model=self.model_name,

messages=[{

"role": "user",

"content": prompt,

"images": [image_base64],

}]

)

# 4. Clean and return the title

return self._sanitize_title(response["message"]["content"])

Prompt engineering for vision AI models is crucial for getting quality results. I learned to be very specific:

- Request lowercase with underscores (filesystem-friendly)

- Provide clear examples of expected output format

- Explicitly limit the length for better performance

- Instruct it to respond with ONLY the title (no explanations or commentary)

Detecting Non-Vision Models

One feature I'm particularly proud of is the automatic detection of non-vision models. The code checks for common error responses:

error_indicators = [

"sorry", "can't", "cannot", "unable",

"don't have", "no image", "as an ai", "language model"

]

if any(indicator in title.lower() for indicator in error_indicators):

raise Exception(

f"Model '{self.model_name}' appears to not support vision. "

f"Please use a vision-capable model like llama3.2-vision, llava, etc."

)

This prevents the frustrating experience I had initially and gives users clear guidance.

Building the UI with tkinter and ttkbootstrap: Modern Python GUI Development

Why ttkbootstrap for Python Desktop Applications?

Standard tkinter looks dated, there's no sugarcoating it. The default widgets have that classic Windows 95 aesthetic that screams "I'm a Python GUI."

Enter ttkbootstrap - a game-changer for Python GUI development. This amazing library provides modern, Bootstrap-inspired themes for tkinter applications. With a single line of code, you can transform your Python desktop app from dated to contemporary:

import ttkbootstrap as ttk

root = ttk.Window(themename="cyborg") # That's it!

I chose the "cyborg" theme for its sleek dark aesthetic, but ttkbootstrap offers many options: darkly, solar, superhero, flatly, cosmo, and more.

Key UI Patterns for Python Desktop Applications

1. Threading for Responsive GUI Applications

One of the biggest challenges with Python desktop apps is keeping the UI responsive during long-running operations like AI image processing. If you process images on the main thread, the entire application freezes.

Solution: Use Python's threading module to run AI operations in the background:

def start_ai_rename(self):

# Start processing in a separate thread

thread = threading.Thread(

target=self._process_images,

args=(selected_model,),

daemon=True

)

thread.start()

2. Thread-Safe UI Updates in tkinter Applications

But there's a catch: tkinter is NOT thread-safe. You can't update GUI elements directly from a background thread. The solution for Python GUI applications is root.after():

# Wrong (from background thread):

self.status_label.config(text="Processing...")

# Right (from background thread):

self.root.after(

0,

lambda: self.status_label.config(text="Processing...")

)

The after() method schedules the UI update to run on the main thread, avoiding race conditions and crashes.

3. Real-Time Progress Updates

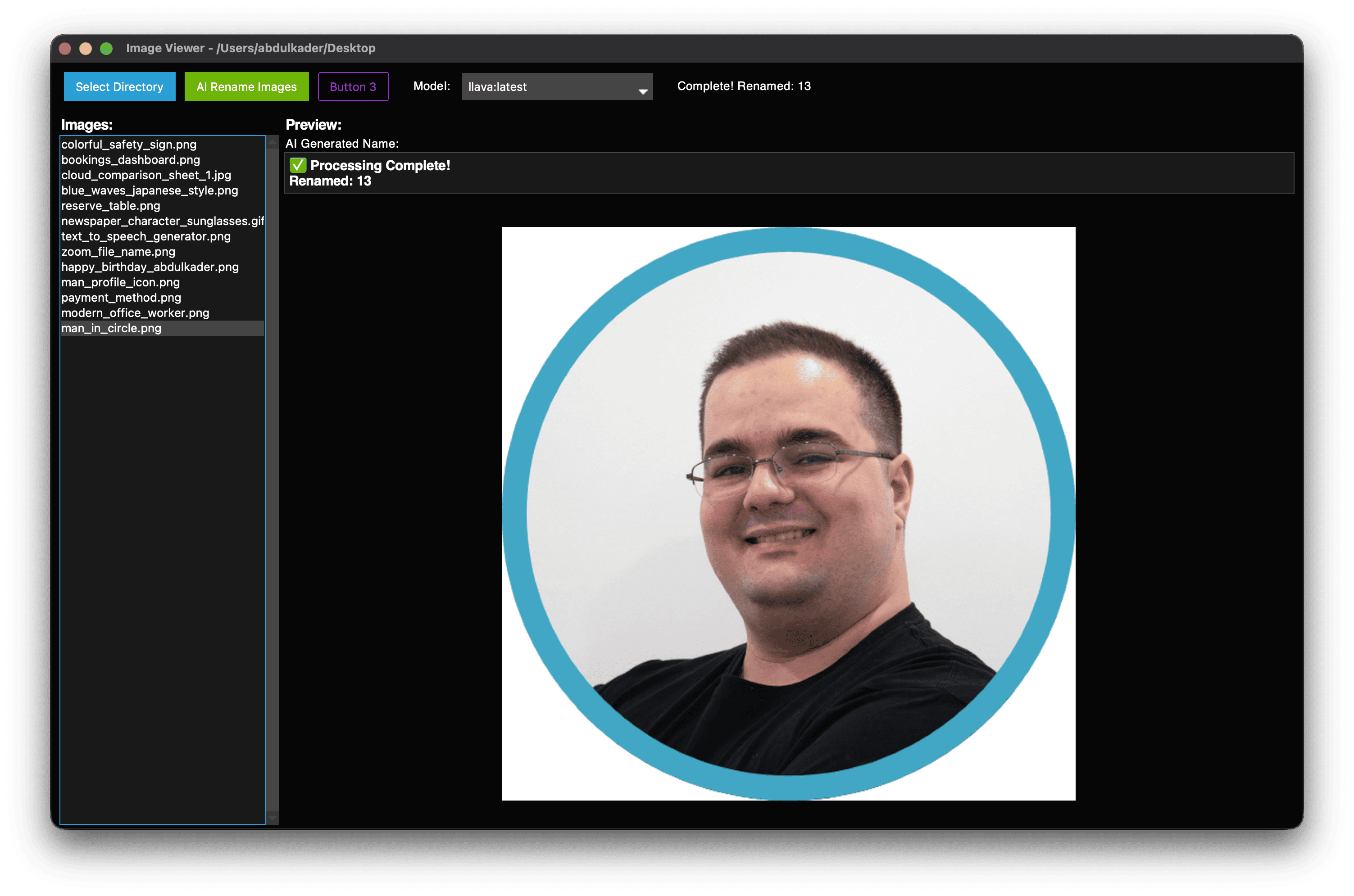

I wanted users to see exactly what's happening as images are processed. The application:

- Selects the current image in the listbox

- Displays the image in the preview pane

- Shows "Analyzing..." in the status box

- Displays the AI-generated name in green

- Updates the filename in the listbox immediately

- Moves to the next image

This gives fantastic visual feedback and makes the wait feel much shorter.

The Layout Structure

The UI is divided into clear sections:

The left panel shows all images, the right panel shows the preview and AI-generated name. It's clean, functional, and intuitive.

Challenges and Solutions

Challenge 1: Model Discovery

Users might have multiple Ollama models installed. How do we know which ones support vision?

Solution: I created a whitelist of known vision-capable model identifiers:

VISION_MODELS = [

"llama3.2-vision", "llama4", "llava", "bakllava",

"qwen2-vl", "qwen-vl", "mistral-small", "pixtral",

"cogvlm"

]

The app queries Ollama for installed models and filters them against this list.

Challenge 2: Filename Collisions

What happens when the AI generates the same name for multiple images?

Solution: The file handler automatically appends numbers:

sunset_beach.jpgsunset_beach_1.jpgsunset_beach_2.jpg

Challenge 3: Invalid Filenames

AI models can be creative, sometimes too creative. They might generate names with invalid characters.

Solution: Aggressive sanitization:

# Lowercase and replace spaces

title = title.lower().replace(" ", "_")

# Remove non-alphanumeric characters except underscores

title = "".join(c for c in title if c.isalnum() or c == "_")

# Truncate to max length

if len(title) > self.max_title_length:

title = title[:self.max_title_length]

Challenge 4: Empty or Useless Responses

Occasionally, the AI returns empty strings or single characters.

Solution: Validation and fallback:

if not title or len(title) < 3:

title = "unnamed_image"

Performance Considerations for Local AI Image Processing

Running AI vision models locally is resource-intensive. Here's what I learned about optimizing Python AI applications:

1. Image Encoding

Images need to be base64-encoded before sending to Ollama. For large images, this can be slow.

Optimization: Consider resizing very large images before processing. The AI doesn't need 4K resolution to identify content.

2. Model Loading

The first inference with a model is slow because it needs to load into memory. Subsequent inferences are much faster.

Strategy: Keep the same model loaded throughout the batch operation.

3. Batch Processing

Processing hundreds of images takes time. The application provides clear progress indicators (e.g., "Processing 45/237...") so users know how long to wait.

What I Learned Building an AI Desktop Application

Technical Lessons from Python Desktop Development

- AI Model Selection is Critical: Don't assume all AI models can do everything. Vision models like LLaVA are required for image recognition tasks. Research capabilities thoroughly before committing.

- Threading is Essential for GUI Apps: For any long-running operation in a Python desktop application, use threads. Never block the main thread or your GUI will freeze.

- Prompt Engineering Matters for AI: The quality of computer vision AI output depends heavily on how you phrase your requests. Be specific and provide examples.

- UI Responsiveness is Non-Negotiable: Users will tolerate slow AI processing, but not a frozen interface. Always use threading for background tasks.

- ttkbootstrap Transforms Python GUIs: Modern themes transform tkinter applications from dated to professional with minimal effort. Essential for production desktop apps.

Development Lessons

- Test with Real Data Early: I should have tested with actual images and vision models from day one, not text-only models.

- User Feedback is Crucial: Real-time status updates and progress indicators make waiting feel much shorter.

- Error Messages Should Guide: Don't just say "Error." Tell users what went wrong and how to fix it.

- Modular Architecture Pays Off: Separating concerns (UI, AI service, file handling) made debugging and iteration much easier.

Future Improvements

If I continue developing this project, here's what I'd add:

- Undo Functionality: Allow users to revert renames if they don't like the results.

- Custom Naming Templates: Let users specify patterns like {date}_{ai_name} or {category}_{description}.

- Batch History Log: Keep a record of all rename operations for auditing.

- Image Categorization: Sort images into folders based on content (people, landscapes, food, etc.).

- Cloud Model Support: Add optional support for cloud APIs (OpenAI Vision, Anthropic Claude) for users without powerful GPUs.

- Duplicate Detection: Identify and flag potential duplicate images.

How to Get Started: Building Your Own AI Image Recognition App

If you want to build this Python AI desktop application yourself, here's the quickest path to get started with Ollama and LLaVA:

-

Install Ollama

# macOS/Linux curl -fsSL https://ollama.com/install.sh | sh # Or download from ollama.com -

Install a Vision Model

ollama pull llava -

Clone and Run

git clone https://github.com/Abdulkader-Safi/ImageRenamingAI.git cd ImageRenamingAI python -m venv .venv source .venv/bin/activate # On Windows: .venv\Scripts\activate pip install -r requirements.txt python main.py

That's it! You're ready to rename images with AI.

Conclusion: Building AI-Powered Desktop Applications with Python

Building this AI image renaming application taught me valuable lessons about AI model selection, Python desktop application development, and the importance of user experience in GUI applications. The biggest takeaway? Always verify that your chosen AI model supports the specific task you need. Vision models like LLaVA, Llama3.2-vision, and other multimodal models are essential for image recognition tasks, while text-only models like Mistral won't work.

The combination of tkinter for structure, ttkbootstrap for modern aesthetics, and Ollama for local AI proved to be powerful and practical. The result is a fast, private, and genuinely useful Python desktop tool for organizing image collections using computer vision.

Whether you're building AI applications, desktop software, or both, I hope my experiences and code can help you avoid some of the pitfalls I encountered. The full source code for this Python image recognition project is available on GitHub, and I welcome contributions and feedback.

Key Takeaways for Python Developers

- Vision AI models (LLaVA, Llama3.2-vision) are required for image processing tasks

- ttkbootstrap makes modern Python GUI development accessible and professional

- Threading is essential for responsive desktop applications

- Local AI with Ollama provides privacy, speed, and cost benefits

- Prompt engineering significantly impacts AI output quality

Key Resources

- Project Repository: ImageRenamingAI on GitHub

- Ollama: ollama.com

- ttkbootstrap: ttkbootstrap.readthedocs.io

- LLaVA Model: Ollama LLaVA

- My Website: abdulkadersafi.com

Final Thoughts: The Future of AI Desktop Applications

The future of Python desktop applications is bright, especially with the rise of local AI models. Tools like Ollama make it possible to build powerful, privacy-respecting AI applications that run entirely on user hardware. No cloud dependencies, no API costs, no privacy concerns, just pure local computer vision power.

I encourage you to experiment, build, and share your own AI-powered desktop applications using Python, tkinter, and vision models. The barrier to entry has never been lower, and the possibilities for image recognition, computer vision, and AI-powered tools are endless.

Ready to build your own AI desktop app? Download the code, install Ollama, and start experimenting with LLaVA today!

Happy coding, and may your images always have meaningful names!

Frequently Asked Questions (FAQ)

What is the best AI model for image recognition in Python?

For local image recognition, LLaVA and Llama3.2-vision are excellent choices. They balance accuracy and performance for most use cases. For production applications requiring high accuracy, consider llava:13b or llava:34b models.

Can I use Mistral for image processing?

No, Mistral is a text-only model and cannot process images. You need a multimodal vision model like LLaVA, Llama3.2-vision, or other vision-capable models from Ollama.

Is tkinter good for modern Python desktop applications?

Yes, when combined with ttkbootstrap. While standard tkinter looks dated, ttkbootstrap provides modern, professional themes that make Python GUI applications competitive with other frameworks.

How much VRAM do I need for vision AI models?

It depends on the model size. LLaVA 7B needs 4-6GB, and Llama3.2-vision requires around 8GB. Larger models like llava:34b need 20GB+ VRAM.

Are there alternatives to Ollama for local AI?

Yes, alternatives include LocalAI, LM Studio, and llama.cpp. However, Ollama provides the best developer experience for Python applications with its simple API and model management.

How fast is local AI image processing compared to cloud APIs?

After the initial model load, local AI processing is typically faster than cloud APIs because there's no network latency. The first inference takes longer as the model loads into memory, but subsequent processing is very fast.

Can I use this for commercial projects?

Yes! The application uses open-source technologies and models. Check the specific licenses for Ollama, LLaVA, and other components. Most are permissive open-source licenses suitable for commercial use.

How accurate is the AI at generating image descriptions?

Accuracy depends on the model size. LLaVA 7B is good for general use (~80-85% accuracy), while larger models like llava:34b provide excellent accuracy (~90-95%). Results vary based on image complexity and clarity.

🤝 Need a Custom RSVP System or Dashboard?

I help businesses build tools that actually work , even on tight deadlines.

Whether you're planning an event, need internal tools, or want a custom dashboard for your team , I can help.

Reach out

📧 Email: safi.abdulkader@gmail.com | 💻 LinkedIn: @abdulkader-safi | 📱 Instagram: @abdulkader.safi | 🏢 DSRPT

Drop me a line, I’m always happy to collaborate! 🚀