Large Language Models (LLMs) have revolutionized how businesses operate, but their operational costs can quickly spiral out of control. According to industry reports, companies spend anywhere from $10,000 to over $1 million monthly on LLM APIs. However, with the right optimization strategies, organizations can reduce these costs by 50-90% without sacrificing quality.

This comprehensive guide explores battle-tested LLM cost optimization strategies, complete with real-world examples and implementation details that you can apply immediately.

Understanding LLM Pricing Models

Before optimizing costs, it's crucial to understand how LLM providers charge for their services:

Token-Based Pricing

- Input tokens: Text sent to the model (prompt)

- Output tokens: Text generated by the model (completion)

- Price variance: Output tokens typically cost 3-5x more than input tokens

Current Market Rates (2025)

GPT-4 Turbo: $10/1M input tokens, $30/1M output tokens

Claude 3.5 Sonnet: $3/1M input tokens, $15/1M output tokens

GPT-3.5 Turbo: $0.50/1M input tokens, $1.50/1M output tokens

Llama 3 (self-hosted): Infrastructure costs only

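Key insight: A single application making 10 million API calls monthly with 1,000-token responses generates 10 billion output tokens per month. At GPT-4 Turbo's $30/1M output rate, that is about $300,000 per month (roughly $3.6 million annually) for output tokens alone, before any input costs.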
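Figures like this are easy to get wrong by a factor of 1,000, so it helps to compute them rather than estimate. A minimal sketch, assuming token-based pricing quoted per 1M tokens and the GPT-4 Turbo rates listed above; `monthly_cost` is an illustrative helper, not a provider API:

```python
def monthly_cost(calls_per_month: int,
                 input_tokens_per_call: int,
                 output_tokens_per_call: int,
                 input_price_per_m: float,
                 output_price_per_m: float) -> float:
    """Estimated monthly spend in USD for a token-priced API.

    Prices are per 1M tokens, matching how providers quote them.
    """
    input_cost = calls_per_month * input_tokens_per_call * input_price_per_m / 1_000_000
    output_cost = calls_per_month * output_tokens_per_call * output_price_per_m / 1_000_000
    return input_cost + output_cost


# Output-only cost for the example above: 10M calls x 1,000 output tokens,
# at an assumed GPT-4 Turbo rate of $30 per 1M output tokens.
cost = monthly_cost(10_000_000, 0, 1_000, 10.0, 30.0)
print(f"${cost:,.0f} per month")  # $300,000 per month
```

Plugging in your own average input and output lengths gives a baseline to measure every optimization below against.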

10 Proven Cost Optimization Strategies

1. Strategic Model Selection and Routing

Strategy: Use smaller, cheaper models for simple tasks and reserve expensive models for complex reasoning.

Implementation approach:

```python
def route_to_appropriate_model(task_complexity, query):
    """
    Route requests to cost-effective models based on complexity.

    call_gpt_35_turbo, call_claude_haiku and call_gpt_4_turbo are
    placeholders for your own API wrappers.
    """
    if task_complexity == "simple":
        # Classification, simple Q&A, formatting
        return call_gpt_35_turbo(query)  # ~20x cheaper than GPT-4 Turbo at the rates above
    elif task_complexity == "medium":
        # Summarization, basic analysis
        return call_claude_haiku(query)  # far cheaper than GPT-4 Turbo
    else:
        # Complex reasoning, multi-step tasks
        return call_gpt_4_turbo(query)
```

Real-world impact: Anthropic reported that implementing intelligent routing reduced their internal costs by 63% while maintaining 95% accuracy.
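The router above takes `task_complexity` as an input but does not say where it comes from. One cheap option, sketched here as a hypothetical heuristic rather than a tested classifier, is to look at query length and a few keywords that tend to signal multi-step reasoning. The keyword patterns and the 150-word threshold are illustrative assumptions to tune against your own labeled traffic.

```python
import re

# Hypothetical keyword patterns; \b word boundaries stop "prove" matching "improve".
COMPLEX_PATTERN = re.compile(r"\b(?:step by step|prove|analy[sz]e|compare|debug)\b")
SIMPLE_PATTERN = re.compile(r"\b(?:classify|translate|yes or no|format|extract)\b")


def estimate_complexity(query: str, long_query_words: int = 150) -> str:
    """Return "simple", "medium", or "complex" for route_to_appropriate_model."""
    text = query.lower()
    word_count = len(text.split())

    # Long inputs or explicit reasoning requests go to the strongest model.
    if word_count > long_query_words or COMPLEX_PATTERN.search(text):
        return "complex"
    # Short, templated tasks can usually go to the cheapest model.
    if word_count < 50 and SIMPLE_PATTERN.search(text):
        return "simple"
    # Everything else goes to the middle tier.
    return "medium"


print(estimate_complexity("Translate to Spanish: Hello"))         # simple
print(estimate_complexity("Compare these two database designs"))  # complex
```

A misrouted complex query costs quality rather than money, so logging the chosen tier alongside user feedback is a practical way to tune rules like these.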

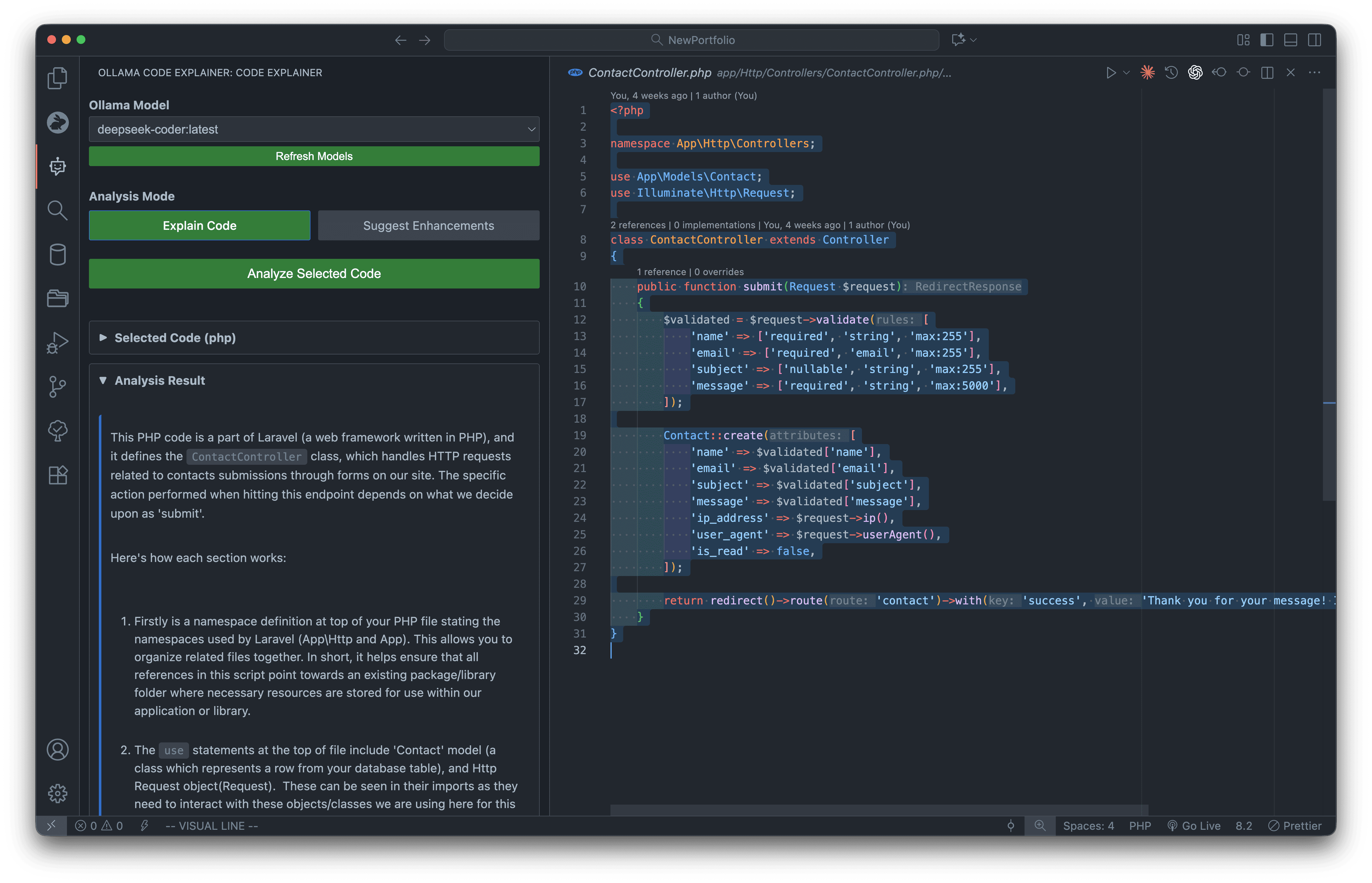

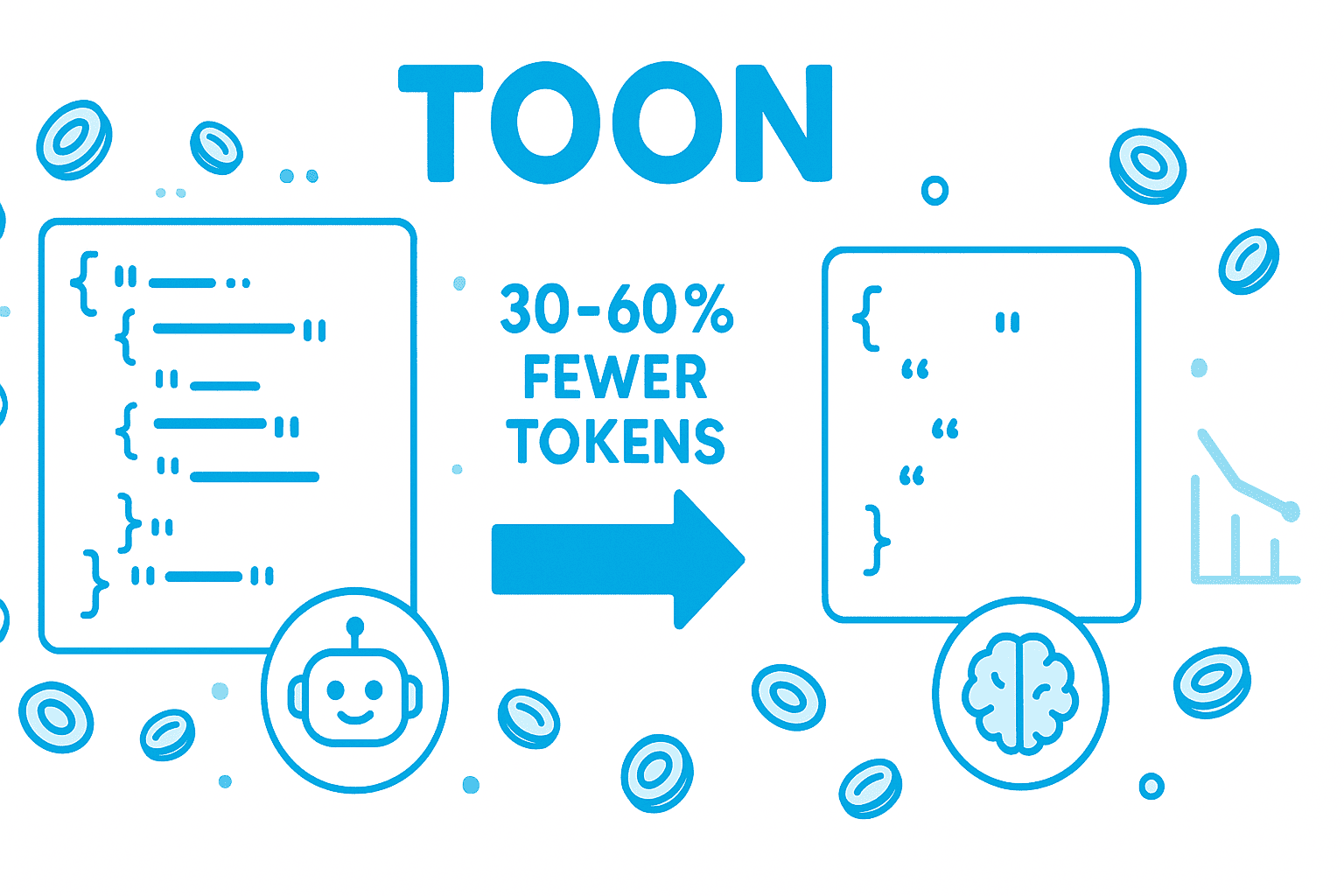

2. Aggressive Prompt Engineering

Strategy: Minimize token usage through concise, efficient prompts.

Before optimization:

Prompt: "I need you to carefully analyze the following customer feedback and provide me with a detailed summary of the main points, including any positive comments, negative comments, and suggestions for improvement. Here is the feedback: [2000 tokens of feedback]"

Average tokens: 2,150 input + 800 output = 2,950 tokens

Cost per request (GPT-4 Turbo): $0.046

After optimization:

Prompt: "Summarize customer feedback. Format: Positive | Negative | Suggestions\n\n[2000 tokens of feedback]"

Average tokens: 2,020 input + 300 output = 2,320 tokens

Cost per request (GPT-4 Turbo): $0.029

Savings: about 36% cost reduction through prompt optimization alone, most of it from the shorter output (500 fewer output tokens, which cost 3x as much as input tokens).
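The per-request figures can be reproduced with a few lines of arithmetic. This sketch assumes GPT-4 Turbo list prices of $10/1M input and $30/1M output tokens; the helper name is illustrative.

```python
INPUT_PRICE_PER_M = 10.0   # assumed GPT-4 Turbo input price, USD per 1M tokens
OUTPUT_PRICE_PER_M = 30.0  # assumed GPT-4 Turbo output price, USD per 1M tokens


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of a single request at the assumed prices."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


before = request_cost(2_150, 800)  # verbose prompt, long answer
after = request_cost(2_020, 300)   # terse prompt, structured answer
savings = 1 - after / before

print(f"before=${before:.4f} after=${after:.4f} savings={savings:.0%}")
# before=$0.0455 after=$0.0292 savings=36%
```

Splitting the cost by input and output makes it clear where a prompt change actually saves money: here, trimming 130 input tokens is worth about $0.0013 per request, while trimming 500 output tokens is worth $0.015.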

Best practices:

- Remove pleasantries and verbose instructions

- Use structured output formats (JSON, bullet points)

- Provide examples instead of lengthy explanations

- Use system messages efficiently

3. Implement Semantic Caching

Strategy: Cache responses for similar queries to avoid redundant API calls.

Implementation:

```python
from sentence_transformers import SentenceTransformer
import numpy as np


class SemanticCache:
    def __init__(self, similarity_threshold=0.95):
        self.model = SentenceTransformer('all-MiniLM-L6-v2')
        self.threshold = similarity_threshold
        # Store each query's embedding once, next to its response,
        # instead of re-encoding every cached query on each lookup
        self.embeddings = []
        self.responses = []

    def get_embedding(self, text):
        # Normalized embeddings make the dot product equal cosine similarity
        return self.model.encode(text, normalize_embeddings=True)

    def check_cache(self, query):
        if not self.embeddings:
            return None
        query_embedding = self.get_embedding(query)
        # One matrix-vector product scores the query against every cached entry
        similarities = np.vstack(self.embeddings) @ query_embedding
        best = int(np.argmax(similarities))
        if similarities[best] >= self.threshold:
            return self.responses[best]
        return None

    def add_to_cache(self, query, response):
        self.embeddings.append(self.get_embedding(query))
        self.responses.append(response)


# Usage
cache = SemanticCache()


def get_llm_response(query):
    # Check cache first
    cached_response = cache.check_cache(query)
    if cached_response is not None:
        return cached_response

    # Call the LLM if not cached (call_openai_api is a placeholder for your API wrapper)
    response = call_openai_api(query)
    cache.add_to_cache(query, response)
    return response
```

Real-world impact: E-commerce company Shopify reported 40% cache hit rate, saving $180,000 annually.
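Semantic caching pays for an embedding on every lookup, so a common pattern is to put a cheaper exact-match layer in front of it. The sketch below normalizes case and whitespace before hashing the prompt; `ExactMatchCache` and its normalization rules are illustrative assumptions, and lowercasing should be skipped for case-sensitive tasks such as code generation.

```python
import hashlib


class ExactMatchCache:
    """Exact-match cache keyed on a hash of the normalized prompt."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(prompt: str, model: str) -> str:
        # Collapse runs of whitespace and ignore case so trivial variations still hit
        normalized = " ".join(prompt.lower().split())
        # Include the model name so different models never share an entry
        return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()

    def get(self, prompt: str, model: str):
        return self._store.get(self._key(prompt, model))

    def put(self, prompt: str, model: str, response: str) -> None:
        self._store[self._key(prompt, model)] = response


cache = ExactMatchCache()
cache.put("What is  the refund policy?", "gpt-3.5-turbo", "30 days.")
print(cache.get("what is the refund policy?", "gpt-3.5-turbo"))  # 30 days.
print(cache.get("what is the refund policy?", "gpt-4"))          # None
```

Only requests that miss this layer need to be embedded and compared by the semantic cache.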

4. Response Streaming and Early Termination

Strategy: Stream responses and terminate generation when sufficient information is received.

Implementation:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def stream_with_early_termination(prompt, max_tokens=500, stop_conditions=None):
    """
    Stream a response and stop reading as soon as a stop condition is met.
    """
    response_text = ""
    stream = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": prompt}],
        max_tokens=max_tokens,
        stream=True,
    )
    try:
        for chunk in stream:
            if not chunk.choices:
                continue
            delta = chunk.choices[0].delta.content or ""
            response_text += delta

            # Early termination: a stop condition appeared in the output
            if stop_conditions and any(cond in response_text for cond in stop_conditions):
                break

            # Heuristic: stop once a reasonably long answer ends a sentence
            if response_text.strip().endswith((".", "!", "?")) and len(response_text) > 100:
                break
    finally:
        # Close the HTTP stream so no further tokens are read
        stream.close()

    return response_text


# Example: Classification task
result = stream_with_early_termination(
    "Classify sentiment: 'This product is amazing!' Answer: ",
    stop_conditions=["Positive", "Negative", "Neutral"],
)
```

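Savings: Can reduce output tokens by 30-50% for classification and extraction tasks. Closing the stream stops your client from reading further tokens; whether generation (and billing) stops at that point depends on the provider, so a tight max_tokens or stop sequence is the more predictable control.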
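The termination check itself does not depend on any SDK, so it can be exercised against a simulated stream. In this sketch, `consume_until_stop` is a hypothetical helper and the list of strings stands in for deltas arriving from the API. The check runs on the accumulated text rather than on each chunk, because a label such as "Positive" can arrive split across two chunks.

```python
from typing import Iterable, Sequence, Tuple


def consume_until_stop(chunks: Iterable[str],
                       stop_conditions: Sequence[str]) -> Tuple[str, int]:
    """Accumulate streamed text until any stop condition appears.

    Returns the accumulated text and the number of chunks consumed.
    """
    text = ""
    consumed = 0
    for chunk in chunks:
        text += chunk
        consumed += 1
        if any(cond in text for cond in stop_conditions):
            break
    return text, consumed


# Simulated deltas: the model names the label first, then keeps explaining.
fake_stream = ["Pos", "itive", ". The reviewer", " clearly loves", " the product."]
text, used = consume_until_stop(fake_stream, ["Positive", "Negative", "Neutral"])
print(text, used)  # Positive 2
```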

5. Batch Processing

Strategy: Process multiple requests in a single API call to reduce overhead and costs.

Implementation:

```python
def batch_process_queries(queries, batch_size=10):
    """
    Process multiple queries in batched prompts.

    call_openai_api and parse_json_response are placeholders for your own
    API wrapper and JSON parser.
    """
    results = []
    for i in range(0, len(queries), batch_size):
        batch = queries[i:i + batch_size]

        # Create batched prompt
        batched_prompt = "Process these queries and return JSON:\n\n"
        for idx, query in enumerate(batch):
            batched_prompt += f"{idx + 1}. {query}\n"
        batched_prompt += '\nReturn format: {"1": "response", "2": "response", ...}'

        # Single API call for multiple queries
        response = call_openai_api(batched_prompt)
        parsed_results = parse_json_response(response)

        # Read answers back by key so results stay aligned with the inputs;
        # None marks any query the model skipped
        results.extend(parsed_results.get(str(n + 1)) for n in range(len(batch)))

    return results


# Example usage
queries = [
    "Translate to Spanish: Hello",
    "Translate to Spanish: Goodbye",
    "Translate to Spanish: Thank you",
    # ... 100 more queries
]

# With 103 queries and batch_size=10, this makes 11 API calls instead of 103
results = batch_process_queries(queries)
```

Real-world impact: SaaS company reduced translation API costs from $2,300 to $400/month (83% reduction).
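Batched prompts shift some of the burden onto parsing: models sometimes add a sentence before or after the JSON, or skip an item. A defensive parser along these lines (a sketch; `parse_batched_response` is a hypothetical name) extracts the outermost JSON object and returns `None` for any missing answer so those queries can be retried individually.

```python
import json
from typing import List, Optional


def parse_batched_response(text: str, expected: int) -> List[Optional[str]]:
    """Map a batched JSON reply like {"1": "...", "2": "..."} back to a list.

    Missing or unparseable entries come back as None.
    """
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        return [None] * expected
    try:
        data = json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return [None] * expected
    if not isinstance(data, dict):
        return [None] * expected
    return [data.get(str(i + 1)) for i in range(expected)]


reply = 'Here you go:\n{"1": "Hola", "3": "Gracias"}\nLet me know if you need more.'
answers = parse_batched_response(reply, 3)
print(answers)  # ['Hola', None, 'Gracias']

# Queries whose answers are missing can be re-sent one at a time.
retry_indices = [i for i, a in enumerate(answers) if a is None]
print(retry_indices)  # [1]
```

Retries eat into the savings, so a rising retry rate is a signal that the batch size is too large for the model to follow reliably.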

6. Fine-Tuning for Specialized Tasks

Strategy: Fine-tune smaller models for specific use cases instead of using large general-purpose models.

Cost comparison:

Option A: GPT-4 for customer support

- Cost per query: $0.015

- Monthly volume: 100,000 queries

- Monthly cost: $1,500

Option B: Fine-tuned GPT-3.5

- Fine-tuning cost: $200 (one-time)

- Cost per query: $0.002

- Monthly cost: $200

- First-year savings: $15,400 ($1,300/month x 12, minus the one-time $200 fine-tuning cost)

When to fine-tune:

- High-volume, repetitive tasks

- Domain-specific language or terminology

- Consistent output format required

- Task-specific accuracy improvement needed

Implementation steps:

- Collect 500+ high-quality examples

- Format training data in JSONL format

- Fine-tune model via API

- Test and iterate

- Deploy fine-tuned model
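For OpenAI's chat-model fine-tuning, each JSONL line holds one conversation in a `messages` array with `system`, `user`, and `assistant` turns. A minimal sketch of writing that file from collected examples follows; the example content, the system prompt, and the `write_training_file` helper are hypothetical, and other providers expect different schemas.

```python
import json
from typing import Iterable, Tuple

SYSTEM_PROMPT = "You are a concise customer support assistant."  # hypothetical


def write_training_file(examples: Iterable[Tuple[str, str]], path: str) -> int:
    """Write (question, answer) pairs as chat-format JSONL; return the line count."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in examples:
            record = {
                "messages": [
                    {"role": "system", "content": SYSTEM_PROMPT},
                    {"role": "user", "content": question},
                    {"role": "assistant", "content": answer},
                ]
            }
            # One JSON object per line, as the JSONL format requires
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count


examples = [
    ("How do I reset my password?", "Go to Settings > Security > Reset password."),
    ("Can I change my plan?", "Yes, under Billing > Plan at any time."),
]
n = write_training_file(examples, "train.jsonl")
print(n)  # 2
```

Keeping the system prompt identical between training data and production requests helps the fine-tuned model behave the way it did during evaluation.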

7. Implement Request Throttling and Quotas

Strategy: Prevent cost overruns through intelligent rate limiting.

Implementation:

```python
from datetime import datetime, timezone

import redis


class BudgetExceededError(Exception):
    """Raised when a request would push spend past the daily budget."""


class CostGuard:
    def __init__(self, redis_client, daily_budget_usd=100):
        self.redis = redis_client
        self.daily_budget = daily_budget_usd
        self.cost_per_1k_tokens = 0.002  # Assumed blended average cost

    def _key(self):
        # One spend counter per UTC day
        today = datetime.now(timezone.utc).strftime("%Y-%m-%d")
        return f"llm_cost:{today}"

    def check_budget(self, estimated_tokens):
        # Get current spend
        current_spend = float(self.redis.get(self._key()) or 0)
        estimated_cost = (estimated_tokens / 1000) * self.cost_per_1k_tokens
        if current_spend + estimated_cost > self.daily_budget:
            raise BudgetExceededError(
                f"Daily budget ${self.daily_budget} would be exceeded"
            )
        return True

    def record_usage(self, actual_tokens):
        key = self._key()
        cost = (actual_tokens / 1000) * self.cost_per_1k_tokens
        self.redis.incrbyfloat(key, cost)
        self.redis.expire(key, 86400 * 7)  # Keep 7 days of data


# Usage
redis_client = redis.Redis(host="localhost", port=6379)
guard = CostGuard(redis_client, daily_budget_usd=500)


def safe_llm_call(prompt, expected_output_tokens=500):
    # Rough estimate: ~1.3 tokens per word of input, plus the expected output
    estimated_tokens = len(prompt.split()) * 1.3 + expected_output_tokens
    guard.check_budget(estimated_tokens)  # raises BudgetExceededError when over budget
    response = call_openai_api(prompt)  # placeholder for your API wrapper
    guard.record_usage(response.usage.total_tokens)
    return response
```

Real-world impact: Prevented $12,000 in unexpected charges due to a bug causing infinite loops in production.
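A budget cap stops runaway spend once it has accumulated; a rate limiter smooths bursts before they reach the API. A token bucket is a standard way to do this: an allowance refills at a fixed rate and each request spends from it. The sketch below is in-process only (a multi-instance deployment would keep the state in a shared store such as Redis), and the injectable `clock` parameter exists so the behavior can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Rate limiter: bursts of up to `capacity` requests, refilled at `rate` per second.

    "Credits" here are request allowances, unrelated to LLM tokens.
    """

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.credits = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity
        self.credits = min(self.capacity, self.credits + (now - self.last) * self.rate)
        self.last = now
        if self.credits >= cost:
            self.credits -= cost
            return True
        return False


# Deterministic demo with a fake clock: 2 requests/second, bursts of up to 3.
fake_now = [0.0]
bucket = TokenBucket(rate=2.0, capacity=3.0, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 0.5                          # half a second later: one credit refilled
print(bucket.allow())                      # True
```

A runaway loop like the one described above would hit the rate limit within seconds, long before it could exhaust a daily budget.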

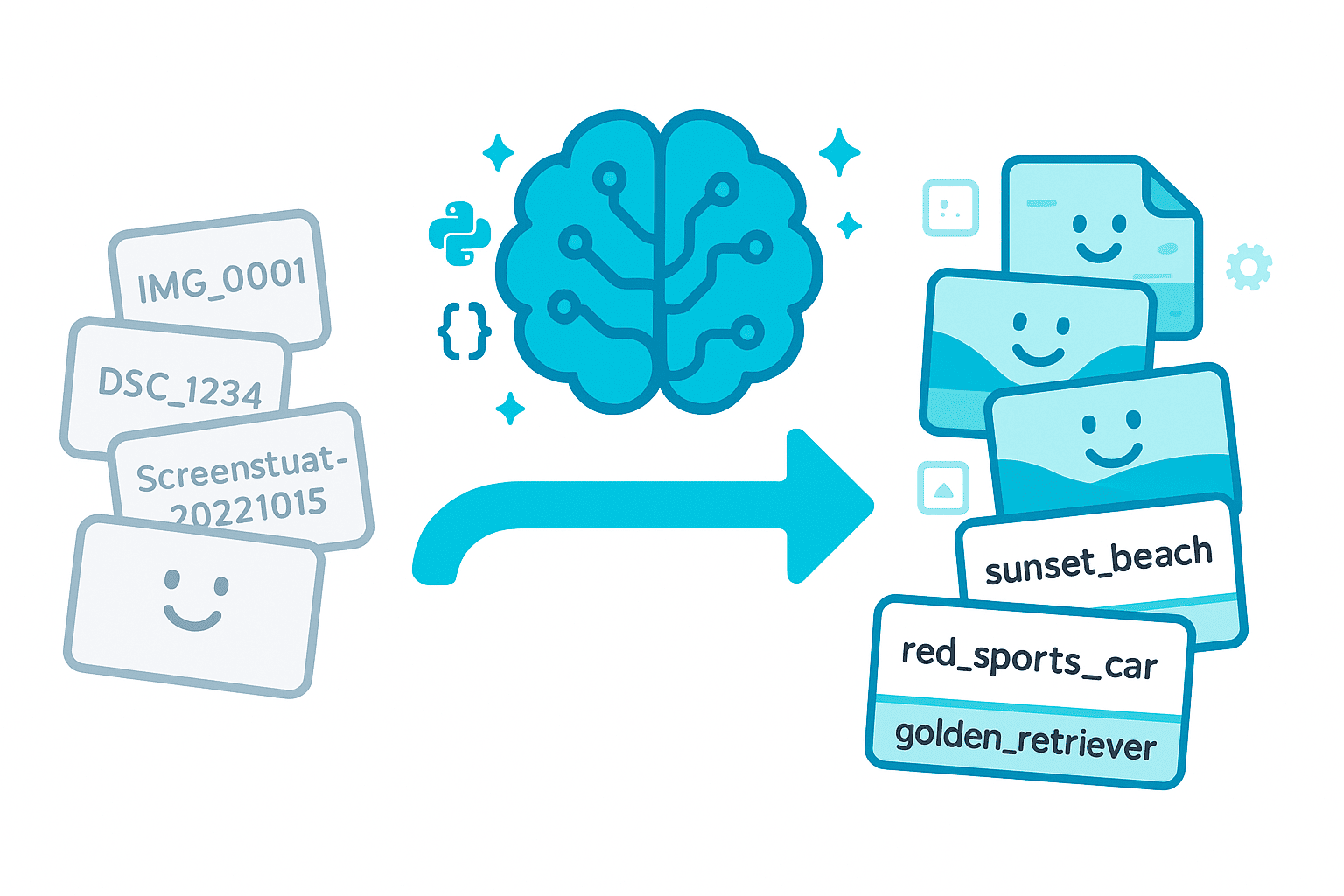

8. Leverage Open-Source and Self-Hosted Models

Strategy: Use open-source models for suitable use cases to eliminate per-token costs.

Cost comparison for 10B (10 billion) input tokens/month, priced at the input-token rates above:

OpenAI GPT-4 API: $100,000/month

Claude 3.5 Sonnet: $30,000/month

Self-hosted Llama 3 70B:

- GPU instances (4x A100): $10,000/month

- Maintenance: $2,000/month

- Total: $12,000/month

Annual savings: $1,056,000 vs GPT-4
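Because self-hosting is mostly a fixed cost while API pricing scales with volume, the decision comes down to a break-even volume. A minimal sketch, assuming the $12,000/month all-in figure above and input-token pricing only; real traffic mixes input and output tokens, and a GPU cluster has a throughput ceiling that this calculation ignores.

```python
def breakeven_tokens_per_month(fixed_monthly_cost: float, api_price_per_m: float) -> float:
    """Monthly token volume at which API spend equals a fixed self-hosting cost."""
    return fixed_monthly_cost / api_price_per_m * 1_000_000


def api_cost(tokens_per_month: float, api_price_per_m: float) -> float:
    """Monthly API spend in USD at a per-1M-token price."""
    return tokens_per_month * api_price_per_m / 1_000_000


SELF_HOSTED = 12_000.0  # 4x A100 plus maintenance, from the comparison above

for name, price in [("GPT-4 Turbo input", 10.0), ("Claude 3.5 Sonnet input", 3.0)]:
    tokens = breakeven_tokens_per_month(SELF_HOSTED, price)
    print(f"{name}: break-even at {tokens / 1e9:.1f}B tokens/month")
# GPT-4 Turbo input: break-even at 1.2B tokens/month
# Claude 3.5 Sonnet input: break-even at 4.0B tokens/month

# At 10B tokens/month, annual savings versus GPT-4 Turbo input pricing:
print(f"${(api_cost(10e9, 10.0) - SELF_HOSTED) * 12:,.0f}")  # $1,056,000
```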

Open-source options:

- Llama 3: Excellent general-purpose capabilities

- Mistral: Efficient for European languages

- Phi-3: Compact model for edge deployment

- Code Llama: Specialized for programming tasks

When self-hosting makes sense:

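- API spend exceeds the fixed cost of self-hosting (with the $12,000/month setup above, roughly 1.2B tokens/month at GPT-4 Turbo input prices, or 4B at Claude 3.5 Sonnet input prices)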

- Data privacy requirements

- Low-latency requirements

- Custom fine-tuning needs

9. Output Length Limiting

Strategy: Constrain output token generation to only what's necessary.

Implementation:

```python
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment


def optimize_max_tokens(task_type):
    """
    Set an appropriate max_tokens based on the task.
    """
    limits = {
        "classification": 10,
        "yes_no": 5,
        "entity_extraction": 100,
        "summarization": 150,
        "short_answer": 50,
        "translation": 200,
        "code_generation": 500,
        "long_form": 1000,
    }
    return limits.get(task_type, 200)


# Example
response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Is this spam: 'Buy now!'"}],
    max_tokens=optimize_max_tokens("yes_no"),  # Only 5 tokens
    temperature=0,
)
```

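Impact: Without an explicit max_tokens, output length is bounded only by the model's maximum output, so task-appropriate limits can save 40-60% on output costs. The cap truncates rather than shortens a response, so pair it with an instruction to answer briefly.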
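A tight cap risks cutting answers off. OpenAI's Chat Completions API reports this with `finish_reason` set to `"length"`, so one option is to start small and retry with a larger budget only when a response was truncated. The sketch below hides the API call behind a hypothetical `generate(prompt, max_tokens)` callable returning `(text, finish_reason)`, which lets the retry policy be tested with a fake; the budget ladder is an illustrative assumption.

```python
from typing import Callable, Sequence, Tuple

Generate = Callable[[str, int], Tuple[str, str]]


def generate_with_escalation(generate: Generate, prompt: str,
                             budgets: Sequence[int] = (50, 200, 1000)) -> Tuple[str, int]:
    """Try increasing max_tokens budgets until the model stops on its own.

    Returns the final text and the budget that produced it.
    """
    text = ""
    for budget in budgets:
        text, finish_reason = generate(prompt, budget)
        # "length" means the cap cut the answer off; anything else means it finished
        if finish_reason != "length":
            return text, budget
    return text, budgets[-1]  # still truncated at the largest budget


def fake_generate(prompt: str, max_tokens: int) -> Tuple[str, str]:
    # Pretend the full answer needs 120 tokens.
    if max_tokens < 120:
        return "partial answer", "length"
    return "complete answer", "stop"


print(generate_with_escalation(fake_generate, "Summarize this ticket"))
# ('complete answer', 200)
```

Each retry re-sends the prompt and pays for the truncated output, so the first budget should be large enough to cover most requests; escalation is for the tail.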

10. Implement Multi-Tier Architecture

Strategy: Create a cascade of models from cheapest to most expensive.

Architecture:

```python
class MultiTierLLM:
    """Try tiers from cheapest to most expensive until one is confident enough.

    check_cache, claude_sonnet, gpt_4, calculate_confidence, log_usage and
    call_openai_api are placeholders for your own implementations.
    """

    def __init__(self):
        self.tiers = [
            {"name": "cache", "cost": 0, "handler": self.check_cache},
            {"name": "rule_based", "cost": 0, "handler": self.rule_based},
            {"name": "small_model", "cost": 0.001, "handler": self.gpt_35},
            {"name": "medium_model", "cost": 0.005, "handler": self.claude_sonnet},
            {"name": "large_model", "cost": 0.015, "handler": self.gpt_4},
        ]

    def process(self, query, confidence_threshold=0.8):
        result = None
        for tier in self.tiers:
            result, confidence = tier["handler"](query)
            # Every tier that runs is paid for, not only the one that answers
            self.log_usage(tier["name"], tier["cost"])
            if confidence >= confidence_threshold:
                return result
        # No tier was confident: keep the large model's answer instead of
        # paying for a second GPT-4 call
        return result

    def rule_based(self, query):
        # Simple pattern matching for common queries
        if "hello" in query.lower():
            return "Hello! How can I help?", 1.0
        return None, 0.0

    def gpt_35(self, query):
        response = call_openai_api(query, model="gpt-3.5-turbo")
        confidence = self.calculate_confidence(response)
        return response, confidence


# Usage
llm = MultiTierLLM()
response = llm.process("What is 2+2?")  # rule_based won't match, so small_model is tried next
```

Real-world impact: Fintech startup reduced average cost per query from $0.012 to $0.003 (75% reduction) using cascading architecture.
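Because a cascade pays for every tier it tries, its average cost depends on where queries stop. A short calculation makes that explicit. The tier costs below are the per-call figures from the `MultiTierLLM` example; the resolution rates are invented for illustration, not measured.

```python
from typing import Sequence, Tuple


def expected_cascade_cost(tiers: Sequence[Tuple[float, float]]) -> float:
    """Expected cost per query for a cascade.

    Each tier is (cost_per_call, fraction of arriving queries it resolves).
    A query reaching a tier pays that tier's cost whether or not it resolves there.
    """
    reach = 1.0  # probability that a query gets this far
    total = 0.0
    for cost, resolve_rate in tiers:
        total += reach * cost
        reach *= 1.0 - resolve_rate
    return total


# Hypothetical resolution rates: cache 30%, rules 10%, small 60%, medium 50%, large 100%.
tiers = [(0.0, 0.30), (0.0, 0.10), (0.001, 0.60), (0.005, 0.50), (0.015, 1.0)]
print(f"${expected_cascade_cost(tiers):.5f} per query")
# $0.00378 per query, versus $0.015 if every query went straight to GPT-4
```

The same formula shows the failure mode: if the cheap tiers rarely reach the confidence threshold, most queries pay for several models and the cascade can cost more than calling the large model directly.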

Real-World Case Study: TechDocs AI

Background

TechDocs AI, a documentation automation platform, was spending $45,000/month on LLM APIs with the following breakdown:

- 15M API calls/month

- Average 1,500 input tokens per call

- Average 800 output tokens per call

- Primary model: GPT-4

Challenge

The cost structure was unsustainable as they scaled from 100 to 1,000 customers. They needed to reduce costs by 70% without degrading quality.

Implementation Strategy

Phase 1: Quick Wins (Month 1)

- Prompt optimization: Reduced average prompt from 1,500 to 900 tokens

- Output limiting: Set max_tokens=400 for documentation tasks

- Semantic caching: Implemented with 35% hit rate

Results: $45,000 → $28,000 (38% reduction)

Phase 2: Architectural Changes (Months 2-3)

- Model routing:
  - Simple formatting: GPT-3.5 Turbo (60% of requests)
  - Complex explanations: GPT-4 (30% of requests)
  - Code generation: Fine-tuned GPT-3.5 (10% of requests)

- Batch processing: Grouped similar documentation requests

- Early termination: Implemented for straightforward queries

Results: $28,000 → $14,500 (68% total reduction)

Phase 3: Advanced Optimization (Months 4-6)

- Self-hosted Llama 3: Deployed for 40% of traffic

- Fine-tuning: Created specialized models for common documentation patterns

- Multi-tier cascade: Implemented intelligent routing

Final results: $45,000 → $11,000 (76% reduction)

Key Metrics After 6 Months

| Metric | Before | After | Change |
|---|---|---|---|
| Monthly cost | $45,000 | $11,000 | -76% |
| Avg response time | 2.3s | 1.8s | -22% |
| Customer satisfaction | 4.2/5 | 4.4/5 | +5% |
| Cache hit rate | 0% | 42% | +42 points |
| Cost per request | $0.003 | $0.0007 | -77% |

Lessons Learned

- Start with low-hanging fruit: Prompt optimization gave fastest ROI

- Monitor quality metrics: Some optimizations initially degraded quality

- Gradual rollout: A/B tested each change before full deployment

- Documentation is key: Maintained playbook for each optimization strategy

- Continuous monitoring: Built dashboards to track costs in real-time

Implementation Roadmap

Week 1-2: Assessment and Planning

- [ ] Audit current LLM usage and costs

- [ ] Identify high-volume use cases

- [ ] Establish baseline metrics

- [ ] Set cost reduction targets

Week 3-4: Quick Wins

- [ ] Optimize prompts (target 30-40% token reduction)

- [ ] Implement output length limits

- [ ] Add basic caching for exact matches

- [ ] Deploy cost monitoring dashboard

Month 2: Architectural Improvements

- [ ] Implement semantic caching

- [ ] Set up model routing infrastructure

- [ ] Add batch processing for suitable workflows

- [ ] Deploy throttling and budget controls

Month 3-4: Advanced Optimization

- [ ] Fine-tune models for high-volume tasks

- [ ] Evaluate self-hosting for specific use cases

- [ ] Implement multi-tier cascading

- [ ] Optimize streaming and early termination

Month 5-6: Scale and Refine

- [ ] Deploy self-hosted models (if applicable)

- [ ] A/B test optimization strategies

- [ ] Refine caching strategies

- [ ] Document and standardize best practices

Monitoring and Optimization

Essential Metrics to Track

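```python
from datetime import datetime, timezone


class LLMMetrics:
    def __init__(self, cost_threshold=0.10):
        # Alert on any single request costing more than this (USD); assumed default, tune per app
        self.cost_threshold = cost_threshold

    def track_request(self, model, input_tokens, output_tokens, latency, cost):
        total_tokens = input_tokens + output_tokens
        metrics = {
            "timestamp": datetime.now(timezone.utc),
            "model": model,
            "input_tokens": input_tokens,
            "output_tokens": output_tokens,
            "total_tokens": total_tokens,
            "latency_ms": latency,
            "cost_usd": cost,
            # Guard against division by zero for empty requests
            "cost_per_1k_tokens": (cost / total_tokens) * 1000 if total_tokens else 0.0,
        }

        # Log to monitoring system (log_to_datadog is a placeholder hook)
        self.log_to_datadog(metrics)

        # Check for anomalies (alert_team is a placeholder hook)
        if cost > self.cost_threshold:
            self.alert_team(f"High cost request: ${cost}")

        return metrics
```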

Key Performance Indicators (KPIs)

- Cost per request: Track average and percentiles (p50, p95, p99)

- Token efficiency: Input/output ratio over time

- Cache hit rate: Percentage of requests served from cache

- Model distribution: Percentage of requests to each model

- Quality metrics: User satisfaction, accuracy scores

- Cost savings: Month-over-month reduction
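Percentiles matter here because a few very long requests can dominate spend while barely moving the average. Python's standard `statistics.quantiles` is enough for a batch report; the `cost_percentiles` helper below is a sketch, and a live dashboard would more likely use its monitoring backend's own percentile aggregation.

```python
import statistics
from typing import Dict, Sequence


def cost_percentiles(costs: Sequence[float]) -> Dict[str, float]:
    """Return p50/p95/p99 of per-request costs (needs at least two values)."""
    # n=100 yields the 99 cut points for percentiles 1..99; "inclusive"
    # treats the data as the full population, so results stay within its range
    cuts = statistics.quantiles(costs, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


# 101 requests costing $0.001 ... $0.101, evenly spread.
costs = [i / 1000 for i in range(1, 102)]
print(cost_percentiles(costs))
# approximately {'p50': 0.051, 'p95': 0.096, 'p99': 0.1}
```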

Dashboard Visualization Example

```text
========================================================
| LLM Cost Dashboard - December 2024                   |
========================================================
| Total Monthly Cost: $11,234 (-68% vs last month)     |
| Total Requests: 8.2M (+15% vs last month)            |
| Avg Cost per Request: $0.00137 (-72% vs last month)  |
========================================================
Cost by Model:
- GPT-3.5 Turbo: $3,456 (31%) [###.......]
- Claude Sonnet: $2,234 (20%) [##........]
- GPT-4:         $4,123 (37%) [####......]
- Self-hosted:   $1,421 (13%) [#.........]
Cache Performance:
- Hit Rate: 43% [####......]
- Savings: $3,200
- Avg Latency: 45ms
========================================================
```

Tools and Libraries for Cost Optimization

1. LangChain

Provides built-in caching, prompt templates, and model routing capabilities.

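```python
from langchain_core.caches import InMemoryCache
from langchain_core.globals import set_llm_cache

# Cache identical LLM calls in process memory for the lifetime of the program
set_llm_cache(InMemoryCache())
```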

2. LiteLLM

Unified interface for 100+ LLMs with automatic fallbacks and load balancing.

```python
import litellm

# Call the primary model; if that call fails, LiteLLM tries the fallback models in order
response = litellm.completion(
    model="gpt-3.5-turbo",
    messages=[{"role": "user", "content": "Hello"}],
    fallbacks=["claude-3-haiku-20240307"],  # example fallback model ID
)
```

3. PromptLayer

Track, monitor, and version control your prompts with built-in cost analysis.

4. Helicone

Open-source LLM observability platform with cost tracking and caching.

5. Custom Token Counters

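```python
import tiktoken


def count_tokens(text, model="gpt-4"):
    encoding = tiktoken.encoding_for_model(model)
    return len(encoding.encode(text))


# Estimate input cost before making a request
prompt = "Summarize customer feedback. Format: Positive | Negative | Suggestions"
input_tokens = count_tokens(prompt)
estimated_cost = (input_tokens / 1000) * 0.01  # $0.01 per 1K input tokens (GPT-4 Turbo list price)
```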

Common Pitfalls to Avoid

1. Over-Optimization

Mistake: Reducing quality too much to save costs.

Solution: Establish quality baselines and monitor satisfaction metrics.

2. Premature Self-Hosting

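Mistake: Self-hosting before reaching sufficient volume.

Solution: Only self-host once monthly API costs consistently exceed the fully loaded cost of running the models yourself; for the 4x A100 setup in strategy 8, that is about $12,000/month.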

3. Ignoring Hidden Costs

Mistake: Focusing only on token costs while ignoring infrastructure.

Solution: Calculate total cost of ownership, including engineering time.

4. Cache Pollution

Mistake: Caching low-quality or outdated responses.

Solution: Implement cache invalidation and quality checks.

5. No Monitoring

Mistake: Optimizing blind without measuring impact.

Solution: Set up comprehensive monitoring before optimizing.
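For the cache-pollution pitfall, a time-to-live (TTL) per entry is the simplest invalidation policy: entries expire after a fixed age, so stale answers age out even if nobody explicitly removes them. The sketch below is an in-memory illustration with an injectable clock for testing; the `TTLCache` name and the one-hour default are assumptions, and a shared cache such as Redis would express the same idea with per-key expiry.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory response cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, value = entry
        if self.clock() - stored_at > self.ttl:
            # Expired: drop it so the next call fetches a fresh answer
            del self._store[key]
            return None
        return value

    def put(self, key: str, value: str) -> None:
        self._store[key] = (self.clock(), value)

    def invalidate(self, key: str) -> None:
        # Explicit removal, e.g. when a user flags a cached answer as wrong
        self._store.pop(key, None)


now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("refund policy", "30 days")
now[0] = 30
print(cache.get("refund policy"))  # 30 days
now[0] = 61
print(cache.get("refund policy"))  # None
```

Shorter TTLs trade hit rate for freshness; answers tied to changing data (prices, policies) usually warrant shorter lifetimes than static explanations.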

Future Trends in LLM Cost Optimization

1. Model Distillation

Training smaller, specialized models from larger ones for 10-100x cost reduction.

2. Edge Deployment

Running tiny models (<1B parameters) directly on devices for zero API costs.

3. Mixture of Experts (MoE)

Architecture that activates only a subset of expert sub-networks for each token, reducing compute per token compared with a dense model of the same total size.

4. Speculative Decoding

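Technique in which a small draft model proposes tokens that the large model verifies in parallel, which can speed up generation 2-3x without changing the output distribution.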

5. Pricing Competition

Increased competition driving prices down 70-80% year-over-year.

Conclusion

LLM cost optimization is not a one-time task but an ongoing process of measurement, experimentation, and refinement. By implementing the strategies outlined in this guide, organizations can achieve 50-90% cost reductions while maintaining or even improving quality.

Key Takeaways

- Start with prompt engineering: Easiest wins with 30-50% savings

- Implement caching early: 40%+ cache hit rates are achievable

- Use the right model for the task: Don't use GPT-4 for simple classification

- Monitor continuously: You can't optimize what you don't measure

- Quality first: Never sacrifice user experience for cost savings

- Iterate gradually: Test and validate each optimization

Action Items

Ready to start optimizing? Follow these steps:

- This week: Audit current usage and optimize your top 3 prompts

- Next week: Implement basic caching and output limits

- This month: Set up model routing and monitoring

- Next quarter: Evaluate fine-tuning and self-hosting options

The combination of strategic thinking, technical implementation, and continuous monitoring will position your organization to leverage LLMs cost-effectively at scale.