In the rapidly evolving world of artificial intelligence and large language models (LLMs), two critical concepts have emerged as fundamental to effective AI interaction: prompt engineering and context engineering. While these terms are often used interchangeably, they represent distinct approaches to optimizing AI communication. Understanding the difference between these two methodologies can dramatically improve your AI outputs, save costs, and unlock more sophisticated use cases.

This comprehensive guide explores the nuances of prompt engineering versus context engineering, their applications, best practices, and real-world examples to help you master both techniques.

What is Prompt Engineering?

Prompt engineering is the art and science of crafting effective input instructions that guide AI models to produce desired outputs. It focuses on how you structure your immediate request or question to an AI system.

Key Characteristics of Prompt Engineering

- Message-level optimization: Focuses on individual queries

- Instruction clarity: Emphasizes clear, specific directions

- Short-term interaction: Typically single-turn or limited conversation depth

- Technique-based: Relies on patterns like few-shot learning, chain-of-thought, role-playing

Basic Prompt Engineering Example

Poor Prompt:

Write about dogs.

Improved Prompt:

Write a 300-word informative article about Golden Retrievers,

focusing on their temperament, exercise needs, and suitability

as family pets. Use a friendly, accessible tone for first-time

dog owners.

The improved prompt provides specificity, constraints, and clear expectations, resulting in more targeted output.

What is Context Engineering?

Context engineering is the strategic design and management of the broader information environment in which AI interactions occur. It encompasses the entire conversation history, system instructions, reference materials, and architectural decisions that influence AI behavior over time.

Key Characteristics of Context Engineering

- System-level optimization: Focuses on the entire interaction framework

- Persistent information: Manages conversation history and long-term context

- Multi-turn conversations: Optimizes for extended dialogues

- Architecture-based: Involves RAG (Retrieval-Augmented Generation), vector databases, context windows

Context Engineering Example

Instead of repeatedly providing background information in each prompt, context engineering involves:

System Context (persistent):

- User is a senior software engineer working in fintech

- Preferred programming languages: Python, Go

- Company compliance requirements: PCI-DSS, SOC 2

- Code style: Functional programming preferred, extensive documentation

User Query: "How should I implement user authentication?"

AI Response: [Tailored to fintech context, suggests compliant

solutions, uses Python/Go examples, includes security documentation]

Key Differences: Prompt Engineering vs Context Engineering

| Aspect | Prompt Engineering | Context Engineering |

|---|---|---|

| Scope | Individual messages | Entire conversation system |

| Duration | Single interaction | Persistent across sessions |

| Focus | What you ask | What the AI knows |

| Optimization | Message clarity | Information architecture |

| Complexity | Relatively simple | More sophisticated |

| Cost Impact | Per-message tokens | Context window management |

| Use Case | Quick tasks, one-offs | Complex workflows, applications |

Prompt Engineering Techniques with Examples

1. Zero-Shot Prompting

Asking the AI to perform a task without examples:

Translate the following English text to French:

"The quick brown fox jumps over the lazy dog."

2. Few-Shot Prompting

Providing examples to guide the AI:

Convert these product descriptions to marketing copy:

Example 1:

Input: "Blue cotton t-shirt, size M"

Output: "Stay cool and comfortable in our classic blue cotton tee,

perfectly tailored in medium for everyday wear!"

Example 2:

Input: "Leather wallet, brown, 6 card slots"

Output: "Organize in style with this sophisticated brown leather

wallet featuring 6 convenient card slots!"

Now convert:

Input: "Wireless headphones, noise-canceling, 30-hour battery"

Output:

3. Chain-of-Thought (CoT) Prompting

Encouraging step-by-step reasoning:

Solve this problem step by step:

A store has 3 shirts for $45 total. If I buy 5 shirts,

what discount percentage do I need to pay the same per-shirt

price as buying 3?

Let's approach this systematically:

1. First, calculate the per-shirt price when buying 3

2. Then, calculate the total for 5 shirts at that price

3. Finally, determine what percentage off makes these equal

Show your work for each step.

4. Role-Based Prompting

Assigning the AI a specific persona:

You are a senior cybersecurity consultant with 15 years of

experience in penetration testing and security architecture.

A client asks: "Should we implement a zero-trust security model?"

Provide your expert recommendation considering cost, implementation

complexity, and security benefits.

5. Constrained Output Prompting

Specifying exact format requirements:

Analyze the sentiment of these customer reviews and return

ONLY a JSON object with no additional commentary:

{

"review_id": "integer",

"sentiment": "positive|negative|neutral",

"confidence": "float between 0-1",

"key_themes": ["array", "of", "strings"]

}

Review: "The product arrived late but the quality exceeded

my expectations. Customer service was very helpful."

Context Engineering Techniques with Examples

1. System Prompt Design

Establishing persistent behavior:

# System-level context that persists across all interactions

SYSTEM_PROMPT = """

You are a Python code reviewer for a healthcare application.

Context:

- HIPAA compliance is mandatory

- Code must handle PHI (Protected Health Information) securely

- All database queries must use parameterized statements

- Error messages must never expose sensitive data

When reviewing code:

1. Check for HIPAA compliance issues first

2. Verify secure data handling

3. Assess code quality and maintainability

4. Suggest improvements with security in mind

"""

# User code review requests now automatically apply this context

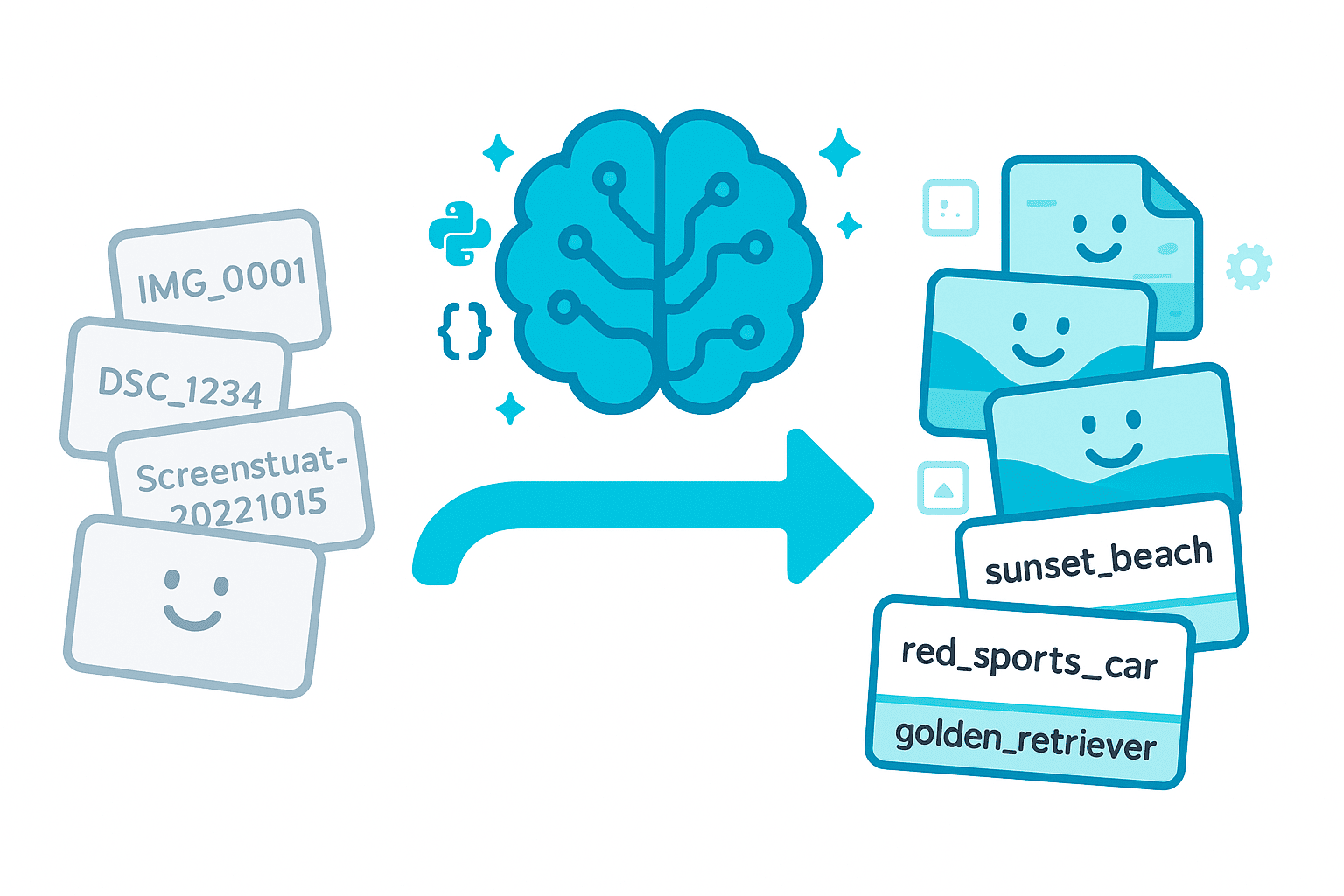

2. Retrieval-Augmented Generation (RAG)

Dynamically injecting relevant context:

# User asks: "What's our refund policy for defective products?"

# RAG system retrieves relevant documentation chunks:

retrieved_context = """

[From Company Policy Manual, Section 4.2]

Defective products can be returned within 90 days of purchase.

Full refunds are provided with proof of purchase.

Shipping costs for returns are covered by the company.

[From Legal Requirements, Section 2.1]

Consumer protection laws require refunds for faulty goods.

Customers cannot be charged restocking fees for defective items.

"""

# Combined prompt sent to AI:

final_prompt = f"""

Using the following context, answer the customer's question.

Context:

{retrieved_context}

Customer Question: What's our refund policy for defective products?

Provide a clear, customer-friendly response that is legally accurate.

"""

3. Conversation Memory Management

Maintaining relevant history:

# Intelligent context management

conversation_history = [

{"role": "user", "content": "I'm building an e-commerce site"},

{"role": "assistant", "content": "Great! What platform..."},

{"role": "user", "content": "Using React and Node.js"},

{"role": "assistant", "content": "Excellent choices..."},

# ... 50 more exchanges ...

{"role": "user", "content": "How do I handle payments?"}

]

# Context engineering: Summarize old context, keep recent exchanges

optimized_context = {

"summary": "User is building a React/Node.js e-commerce site.

Previously discussed: product catalog, user auth,

database schema. Tech stack: MongoDB, Express, Stripe.",

"recent_messages": conversation_history[-6:], # Last 3 exchanges

"current_focus": "Payment processing implementation"

}

4. Conditional Context Injection

Adding context based on triggers:

def build_context(user_query, user_profile):

base_context = "You are a helpful coding assistant."

# Inject security context when relevant

if any(keyword in user_query.lower()

for keyword in ['auth', 'password', 'security', 'token']):

base_context += """

SECURITY GUIDELINES:

- Never store passwords in plain text

- Always use bcrypt or Argon2 for hashing

- Implement rate limiting on auth endpoints

- Use JWT with short expiration times

- Enable 2FA when possible

"""

# Inject performance context for scale-related queries

if any(keyword in user_query.lower()

for keyword in ['scale', 'performance', 'optimize', 'slow']):

base_context += """

PERFORMANCE BEST PRACTICES:

- Use database indexing appropriately

- Implement caching strategies

- Consider CDN for static assets

- Use lazy loading and pagination

- Profile before optimizing

"""

return base_context

5. Multi-Document Context Orchestration

Managing multiple knowledge sources:

# User building a feature that touches multiple systems

user_query = "How do I add a product to cart and update inventory?"

# Context engineering: Pull from multiple sources

contexts = {

"cart_api_docs": retrieve_docs("cart_service"),

"inventory_api_docs": retrieve_docs("inventory_service"),

"transaction_patterns": retrieve_docs("distributed_transactions"),

"error_handling": retrieve_docs("error_handling_standards"),

"code_examples": retrieve_similar_code(user_query)

}

# Structured context assembly

engineered_context = f"""

RELEVANT DOCUMENTATION:

1. Cart Service API:

{contexts['cart_api_docs']}

2. Inventory Service API:

{contexts['inventory_api_docs']}

3. Transaction Patterns:

{contexts['transaction_patterns']}

4. Similar Implementations:

{contexts['code_examples']}

Using the above context, provide a solution that:

- Maintains data consistency

- Follows our error handling standards

- Uses the correct API endpoints

- Handles edge cases

User Question: {user_query}

"""

When to Use Prompt Engineering vs Context Engineering

Use Prompt Engineering When

-

One-off tasks: Quick questions or single-purpose operations

"Summarize this article in 3 bullet points" -

Experimentation: Testing AI capabilities or trying new approaches

"Explain quantum computing using only kitchen analogies" -

Simple workflows: Tasks that don't require persistent state

"Convert this CSV data to JSON format" -

Immediate corrections: Refining output in the moment

"Make the tone more professional and reduce it to 200 words"

Use Context Engineering When

-

Application development: Building AI-powered products

Customer support chatbot with company knowledge base Medical diagnosis assistant with patient history Code review tool with project style guides -

Complex workflows: Multi-step processes with dependencies

Research assistant that maintains topic context across queries Content creation pipeline with brand guidelines Data analysis tool with dataset context -

Personalization: Adapting to user preferences over time

Learning user's coding style preferences Remembering domain expertise level Adapting communication style -

Cost optimization: Managing token usage efficiently

Summarizing old conversations Strategic context window management Caching frequently used information

Combining Both Approaches: Best Practices

The most effective AI implementations use both techniques synergistically:

Example: AI-Powered Code Review System

# Context Engineering: System setup

SYSTEM_CONTEXT = """

Project: FinTech Payment Gateway

Language: Python 3.11

Frameworks: FastAPI, SQLAlchemy, Pydantic

Standards: PEP 8, type hints required, 80% test coverage

Security: OWASP Top 10, PCI-DSS compliant

"""

# Context Engineering: User profile

USER_CONTEXT = """

Developer: Senior, 5 years experience

Strengths: API design, database optimization

Learning goals: Async programming, microservices

Previous feedback: Tends to under-comment complex logic

"""

# Context Engineering: Project-specific rules

PROJECT_RULES = """

- All monetary values use Decimal type

- Database transactions must be atomic

- API responses follow JSON:API specification

- Errors include correlation IDs for tracing

"""

# Prompt Engineering: Specific review request

def review_code(code_snippet, focus_area=None):

prompt = f"""

Review the following code changes:

```python

{code_snippet}

```

"""

# Prompt Engineering: Add specific focus if requested

if focus_area:

prompt += f"\nPay special attention to: {focus_area}\n"

prompt += """

Provide:

1. Security vulnerabilities (if any)

2. Compliance issues (if any)

3. Code quality improvements

4. Performance considerations

5. Specific line-by-line feedback

Format as:

- 🔴 CRITICAL: [must fix]

- 🟡 WARNING: [should fix]

- 🔵 SUGGESTION: [nice to have]

"""

# Combine context + prompt

full_context = f"{SYSTEM_CONTEXT}\n{USER_CONTEXT}\n{PROJECT_RULES}\n{prompt}"

return ai_model.generate(full_context)

Advanced Pattern: Dynamic Context Engineering

class ContextManager:

def __init__(self, max_tokens=8000):

self.max_tokens = max_tokens

self.persistent_context = {}

self.conversation_history = []

def add_persistent_context(self, key, value, priority="medium"):

"""Context that should always be included"""

self.persistent_context[key] = {

"content": value,

"priority": priority,

"tokens": estimate_tokens(value)

}

def add_message(self, role, content):

"""Add to conversation history"""

self.conversation_history.append({

"role": role,

"content": content,

"timestamp": datetime.now(),

"tokens": estimate_tokens(content)

})

def build_optimized_context(self, current_query):

"""Intelligently build context within token limits"""

# Start with high-priority persistent context

context_parts = []

token_count = 0

# Add critical persistent context

for key, ctx in self.persistent_context.items():

if ctx["priority"] == "high":

context_parts.append(ctx["content"])

token_count += ctx["tokens"]

# Retrieve relevant RAG context based on query

rag_results = retrieve_relevant_docs(current_query, limit=3)

rag_tokens = estimate_tokens(rag_results)

if token_count + rag_tokens < self.max_tokens * 0.5:

context_parts.append(f"RELEVANT DOCUMENTATION:\n{rag_results}")

token_count += rag_tokens

# Add recent conversation history (prioritize recent messages)

remaining_tokens = self.max_tokens - token_count - estimate_tokens(current_query) - 500 # buffer

recent_messages = []

for msg in reversed(self.conversation_history):

if token_count + msg["tokens"] < remaining_tokens:

recent_messages.insert(0, msg)

token_count += msg["tokens"]

else:

break

# If we have old messages that don't fit, summarize them

if len(recent_messages) < len(self.conversation_history):

old_messages = self.conversation_history[:-len(recent_messages)]

summary = summarize_conversation(old_messages)

context_parts.append(f"CONVERSATION SUMMARY:\n{summary}")

# Add recent messages

context_parts.append(format_messages(recent_messages))

return "\n\n".join(context_parts)

# Usage

context_mgr = ContextManager()

# Context Engineering: Set up persistent context

context_mgr.add_persistent_context(

"system_role",

"You are an expert Python developer specializing in web APIs.",

priority="high"

)

context_mgr.add_persistent_context(

"code_standards",

load_file("project_standards.md"),

priority="medium"

)

# Over multiple interactions

for user_query in user_queries:

# Build optimized context for this query

context = context_mgr.build_optimized_context(user_query)

# Prompt Engineering: Structure the specific request

prompt = f"""

{context}

Current Question: {user_query}

Provide a detailed answer with code examples if applicable.

"""

response = ai_model.generate(prompt)

# Update conversation history for future context

context_mgr.add_message("user", user_query)

context_mgr.add_message("assistant", response)

Performance and Cost Optimization

Token Management Strategies

# Bad: Repeating full context every time

for query in queries:

full_context = load_entire_documentation() # 50k tokens

prompt = f"{full_context}\n\nQuestion: {query}"

response = ai_model.generate(prompt) # Expensive!

# Good: Context engineering with smart retrieval

embedding_db = create_vector_database(documentation)

for query in queries:

relevant_context = embedding_db.retrieve(query, top_k=3) # 2k tokens

prompt = f"{relevant_context}\n\nQuestion: {query}"

response = ai_model.generate(prompt) # 25x cheaper!

Context Window Utilization

def optimize_context_usage(conversation, max_tokens=4000):

"""

Intelligently compress context while preserving essential information

"""

# Categorize messages by importance

categorized = {

"critical": [], # System prompts, key decisions

"high": [], # Recent messages (last 3 exchanges)

"medium": [], # Relevant past context

"low": [] # Summarizable content

}

# Last 3 exchanges are high priority

categorized["high"] = conversation[-6:]

# Identify critical messages with keywords

for msg in conversation[:-6]:

if contains_critical_keywords(msg):

categorized["critical"].append(msg)

else:

categorized["low"].append(msg)

# Build context within budget

context = []

token_count = 0

# Add critical messages first

for msg in categorized["critical"]:

context.append(msg)

token_count += estimate_tokens(msg)

# Add high priority (recent) messages

for msg in categorized["high"]:

context.append(msg)

token_count += estimate_tokens(msg)

# Summarize low priority if space remains

if categorized["low"] and token_count < max_tokens * 0.7:

summary = create_summary(categorized["low"])

if token_count + estimate_tokens(summary) < max_tokens * 0.8:

context.insert(len(categorized["critical"]),

{"role": "system", "content": f"Previous discussion summary: {summary}"})

return context

Real-World Use Cases

Use Case 1: Customer Support Chatbot

# Context Engineering Layer

class SupportBotContext:

def __init__(self, customer_id):

self.customer = load_customer_profile(customer_id)

self.order_history = load_orders(customer_id)

self.previous_tickets = load_support_history(customer_id)

self.kb = load_knowledge_base()

def build_context(self):

return f"""

CUSTOMER PROFILE:

- Name: {self.customer.name}

- Tier: {self.customer.tier}

- Member since: {self.customer.join_date}

- Recent orders: {summarize_orders(self.order_history[:3])}

- Previous issues: {summarize_tickets(self.previous_tickets[:2])}

SUPPORT GUIDELINES:

- {self.customer.tier} customers get {get_tier_benefits(self.customer.tier)}

- Current promotions: {get_active_promotions()}

- Escalation rules: {get_escalation_rules()}

"""

# Prompt Engineering Layer

def handle_customer_query(query, context_manager):

context = context_manager.build_context()

# Prompt engineering: Structure for optimal response

prompt = f"""

{context}

Customer Query: "{query}"

Provide a helpful response that:

1. Addresses their specific question

2. References their order history if relevant

3. Offers proactive solutions

4. Maintains a friendly, professional tone

5. Includes next steps or actions

If the query requires human escalation, explain why and what information to collect.

"""

return ai_model.generate(prompt)

# Usage

context_mgr = SupportBotContext(customer_id="12345")

response = handle_customer_query(

"I haven't received my order from last week",

context_mgr

)

Use Case 2: Content Generation Pipeline

# Context Engineering: Brand and style guide

BRAND_CONTEXT = """

Brand: TechStart (B2B SaaS company)

Voice: Professional but approachable

Tone: Confident, solution-oriented

Audience: CTOs and technical decision-makers

Avoid: Jargon, hype, superlatives without data

Always include: Concrete examples, ROI mentions

"""

# Context Engineering: SEO requirements

SEO_CONTEXT = """

- Target keyword density: 1-2%

- Include semantic keywords naturally

- H2 tags every 300-400 words

- Meta description: 150-160 characters

- Internal linking opportunities

- Alt text for images

"""

# Prompt Engineering: Content creation

def generate_blog_post(topic, keywords, word_count=1500):

prompt = f"""

{BRAND_CONTEXT}

{SEO_CONTEXT}

Topic: {topic}

Primary keyword: {keywords[0]}

Secondary keywords: {', '.join(keywords[1:])}

Target length: {word_count} words

Create a blog post following this structure:

1. Hook (1 paragraph): Start with a compelling statistic or question

2. Problem statement (2-3 paragraphs): Describe the challenge

3. Solution overview (3-4 paragraphs): Introduce your approach

4. Detailed explanation (5-6 paragraphs): Break down the solution

5. Real example (2-3 paragraphs): Show it in action

6. Implementation steps (numbered list)

7. Conclusion with CTA

Include:

- At least one data point or statistic

- A code example or technical diagram description

- 2-3 internal link suggestions [in brackets]

- Image alt text suggestions

Output the post in markdown format.

"""

return ai_model.generate(prompt)

Use Case 3: Code Documentation Generator

# Context Engineering: Project context

PROJECT_CONTEXT = """

Project: payment-gateway-api

Stack: Python 3.11, FastAPI, PostgreSQL

Architecture: Microservices with event-driven communication

Documentation standard: Google Python Style Guide

Audience: Internal developers and third-party integrators

"""

# Context Engineering: Existing codebase knowledge

def get_codebase_context(function_name):

related_functions = find_related_functions(function_name)

call_graph = analyze_call_graph(function_name)

dependencies = extract_dependencies(function_name)

return f"""

CODEBASE CONTEXT:

Related functions: {', '.join(related_functions)}

Called by: {', '.join(call_graph['callers'])}

Calls: {', '.join(call_graph['callees'])}

External dependencies: {', '.join(dependencies)}

"""

# Prompt Engineering: Documentation generation

def generate_documentation(code, function_name):

codebase_context = get_codebase_context(function_name)

prompt = f"""

{PROJECT_CONTEXT}

{codebase_context}

Generate comprehensive documentation for this function:

```python

{code}

```

Include:

1. Docstring with:

- Brief description (one line)

- Detailed explanation (2-3 sentences)

- Args: with types and descriptions

- Returns: with type and description

- Raises: any exceptions

- Example usage

2. Inline comments for:

- Complex logic

- Non-obvious decisions

- Security considerations

- Performance notes

3. Related functions developers should know about

4. Integration notes (how it fits in the larger system)

Format using Google Python Style Guide.

"""

return ai_model.generate(prompt)

Common Pitfalls and How to Avoid Them

Pitfall 1: Context Overload

Problem:

# Dumping everything into context

context = {

"full_codebase": read_all_files(), # 500k tokens!

"all_docs": load_documentation(), # 200k tokens!

"full_history": conversation_history # 100k tokens!

}

Solution:

# Strategic context selection

context = {

"relevant_code": retrieve_similar_code(query, top_k=3),

"targeted_docs": search_documentation(query, limit=5),

"recent_history": conversation_history[-10:],

"summary": summarize_older_context(conversation_history[:-10])

}

Pitfall 2: Ambiguous Prompts

Problem:

"Make this code better"

Solution:

Refactor this code to improve:

1. Error handling (add try-catch for network calls)

2. Type safety (add TypeScript types)

3. Performance (reduce O(n²) to O(n) if possible)

4. Readability (extract magic numbers to constants)

Maintain existing functionality and API contract.

Pitfall 3: Ignoring Token Costs

Problem:

# Sending full context every time

for i in range(1000):

response = ai_model.generate(

full_context + user_queries[i]

) # Expensive!

Solution:

# Cache and reuse context intelligently

cached_embedding = embed_context(full_context)

for query in user_queries:

relevant_chunks = retrieve_from_cache(cached_embedding, query)

response = ai_model.generate(relevant_chunks + query)

Pitfall 4: Static Context

Problem:

# Context never updates

SYSTEM_PROMPT = "You are a helpful assistant."

# User's needs evolve, but context doesn't

Solution:

class AdaptiveContext:

def __init__(self):

self.user_expertise = "beginner"

self.preferences = {}

def update_from_interaction(self, user_message, ai_response):

# Learn from interaction

if contains_technical_terms(user_message):

self.user_expertise = "advanced"

if "explain like I'm 5" in user_message.lower():

self.preferences["explanation_style"] = "simple"

def get_context(self):

base = "You are a helpful assistant."

if self.user_expertise == "advanced":

base += " The user is technical; use precise terminology."

if self.preferences.get("explanation_style") == "simple":

base += " Use simple analogies and avoid jargon."

return base

Measuring Success: Metrics and Evaluation

For Prompt Engineering

def evaluate_prompt_quality(prompt, expected_outputs):

metrics = {}

# Clarity score

metrics['clarity'] = calculate_clarity_score(prompt)

# Specificity score

metrics['specificity'] = len(extract_constraints(prompt))

# Output consistency

responses = [ai_model.generate(prompt) for _ in range(5)]

metrics['consistency'] = calculate_similarity(responses)

# Accuracy vs expected

metrics['accuracy'] = compare_to_expected(responses, expected_outputs)

return metrics

# Example

results = evaluate_prompt_quality(

"Translate 'hello' to French",

expected_outputs=["bonjour", "Bonjour"]

)

# Output: {clarity: 0.95, specificity: 2, consistency: 1.0, accuracy: 1.0}

For Context Engineering

def evaluate_context_quality(context_manager):

metrics = {}

# Token efficiency

metrics['token_usage'] = calculate_average_tokens(context_manager)

metrics['context_utilization'] = calculate_utilization_rate(context_manager)

# Information relevance

metrics['relevance_score'] = evaluate_context_relevance(context_manager)

# Retrieval accuracy (for RAG)

metrics['retrieval_precision'] = calculate_retrieval_precision(context_manager)

metrics['retrieval_recall'] = calculate_retrieval_recall(context_manager)

# User satisfaction

metrics['task_completion_rate'] = get_completion_rate(context_manager)

return metrics

Future Trends and Emerging Patterns

1. Hybrid Context Architectures

class HybridContextSystem:

"""

Combines multiple context engineering approaches

"""

def __init__(self):

self.vector_store = VectorDatabase() # For RAG

self.graph_db = KnowledgeGraph() # For relationships

self.conversation_memory = ConversationBuffer()

self.user_model = UserProfileManager()

def build_context(self, query):

# Vector similarity search

similar_docs = self.vector_store.search(query, top_k=3)

# Graph traversal for related concepts

related_concepts = self.graph_db.get_neighbors(

extract_entities(query)

)

# Conversation memory

relevant_history = self.conversation_memory.retrieve_relevant(query)

# User personalization

user_prefs = self.user_model.get_preferences()

# Orchestrate all sources

return orchestrate_context(

similar_docs,

related_concepts,

relevant_history,

user_prefs

)

2. Self-Improving Context Systems

class LearningContextManager:

def __init__(self):

self.context_patterns = []

self.performance_log = []

def log_interaction(self, context, prompt, response, user_feedback):

self.performance_log.append({

'context': context,

'prompt': prompt,

'response': response,

'feedback': user_feedback,

'timestamp': datetime.now()

})

# Analyze patterns

if len(self.performance_log) % 100 == 0:

self.analyze_and_improve()

def analyze_and_improve(self):

"""

Identify what context patterns lead to best results

"""

positive_examples = [

log for log in self.performance_log

if log['feedback'] == 'positive'

]

# Extract common patterns

patterns = extract_patterns(positive_examples)

# Update context strategy

self.context_patterns = patterns

# A/B test new patterns

self.run_experiments(patterns)

3. Multi-Modal Context Engineering

class MultiModalContext:

def build_context(self, query, attachments):

context_elements = []

# Text context

if 'text' in attachments:

context_elements.append(

process_text_context(attachments['text'])

)

# Image context

if 'images' in attachments:

image_descriptions = [

vision_model.describe(img)

for img in attachments['images']

]

context_elements.append(

f"Visual context: {', '.join(image_descriptions)}"

)

# Code context

if 'code' in attachments:

code_analysis = analyze_code(attachments['code'])

context_elements.append(

f"Code context: {code_analysis}"

)

# Audio context (if applicable)

if 'audio' in attachments:

transcription = speech_to_text(attachments['audio'])

context_elements.append(

f"Audio context: {transcription}"

)

return combine_multimodal_context(context_elements)

Conclusion

Understanding the distinction between prompt engineering and context engineering is crucial for anyone working with AI systems in 2025 and beyond. While prompt engineering focuses on crafting effective individual requests, context engineering takes a holistic approach to managing the information environment in which AI operates.

Key Takeaways:

- Prompt engineering is about optimization at the message level - what you ask and how you ask it

- Context engineering is about optimization at the system level - what the AI knows and remembers

- Most sophisticated AI applications require both approaches working in harmony

- Context engineering becomes increasingly important as applications scale and complexity grows

- Token efficiency and cost optimization are critical considerations for production systems

- Future systems will leverage hybrid approaches combining multiple context strategies

Best Practices Summary:

- Start with clear, specific prompts (prompt engineering)

- Build a robust context architecture for persistent applications (context engineering)

- Monitor and optimize token usage continuously

- Implement intelligent context retrieval rather than dumping everything

- Use conversation summarization for long interactions

- Personalize context based on user profiles and history

- Measure and iterate on both prompt and context effectiveness

Whether you're building a simple chatbot or a complex AI-powered application, mastering both prompt engineering and context engineering will dramatically improve your results, reduce costs, and create better user experiences.

The future of AI interaction lies not just in asking better questions, but in building smarter systems that understand context, remember what matters, and continuously adapt to user needs. By combining the tactical precision of prompt engineering with the strategic depth of context engineering, you'll be well-equipped to build the next generation of AI applications.

🤝 Need a Custom RSVP System or Dashboard?

I help businesses build tools that actually work , even on tight deadlines.

Whether you're planning an event, need internal tools, or want a custom dashboard for your team , I can help.

Reach out

📧 Email: safi.abdulkader@gmail.com | 💻 LinkedIn: @abdulkader-safi | 📱 Instagram: @abdulkader.safi | 🏢 DSRPT

Drop me a line, I’m always happy to collaborate! 🚀